What is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) is a workflow that enhances Large Language Models (LLMs) by supplying external knowledge at query time. A standalone language model relies solely on its training data, which may be outdated or incomplete; RAG instead retrieves relevant documents from a knowledge base (such as a vector database, a document repository, or an API) and adds them to the model's input to ground its responses.

How does RAG work?

- Retrieval : A query is sent to a retrieval system (e.g., FAISS, Chroma, Milvus, or Elasticsearch) to fetch relevant contextual documents.

- Augmentation: The retrieved documents are added as additional input to the generative model.

- Generation: A language model (like GPT, LLaMA, or Mistral) generates an answer using both the query and retrieved information.
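The three steps can be illustrated with a minimal, dependency-free Python sketch. It is an illustration under simplifying assumptions, not a real pipeline: the sample documents are invented, keyword overlap stands in for embedding-based vector search, and `generate` is a stub that returns the augmented prompt you would send to an LLM such as GPT or Llama.

```python
import re

# Hypothetical sample knowledge base; a real system loads documents into a vector database.
DOCUMENTS = [
    "FAISS is a library for efficient similarity search over dense vectors.",
    "ChromaDB is an open-source vector database for storing embeddings.",
    "Bash scripts can schedule jobs with cron.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval: rank documents by word overlap with the query (stand-in for vector search)."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def augment(query: str, context: list[str]) -> str:
    """Augmentation: place the retrieved passages in front of the user's question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Context:\n{joined}\n\nQuestion: {query}"


def generate(prompt: str) -> str:
    """Generation (stub): a real pipeline would send `prompt` to an LLM here."""
    return prompt


if __name__ == "__main__":
    question = "What is FAISS used for?"
    print(generate(augment(question, retrieve(question, DOCUMENTS, k=1))))
```

Swapping `retrieve` for an embedding search and `generate` for an API call gives the real workflow described in the rest of this guide.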

Why Automate RAG Workflows?

A pipeline defines how data flows automatically through a series of steps to produce new results; ETL pipelines and RAG pipelines are two examples. A RAG pipeline repeats several steps, such as:

- Data Preprocessing and Embedding of documents

- Running the retrieval queries

- Handling API Calls to generative AI models

- Formatting responses

- Monitoring and Debugging

Running these steps manually takes effort and time. Automation helps by:

- Reducing human effort

- Increasing speed and scalability

- Improving consistency and reproducibility

Advantages of Implementing Bash Scripting for RAG Automation

- Lightweight & Efficient

- Seamless Integration with CLI Tools

- Easy Scheduling & Background Execution

- Secure & Customizable

PREREQUISITES

Basics of Bash Scripting

- Familiarity with Bash scripting (loops, variables, the terminal, error handling, etc.)

- An understanding of the core concepts of RAG pipelines

Required Tools and Dependencies

This guide targets Arch Linux. The Bash scripts also run on Windows 10/11 through a Bash environment such as WSL, although the package installation commands differ.

A. Essential Linux Utilities : for fetching data from the web, processing and cleaning data, and handling JSON data.

wget, curl, grep, awk, sed, jq

B. AI & ML Dependencies :

- Python (the base language for the AI tooling)

- LLM integration (OpenAI GPT, LLaMA, Mistral)

- Pip and Virtualenv (to manage dependencies)

- Vector databases and search libraries (FAISS, Chroma, Milvus)

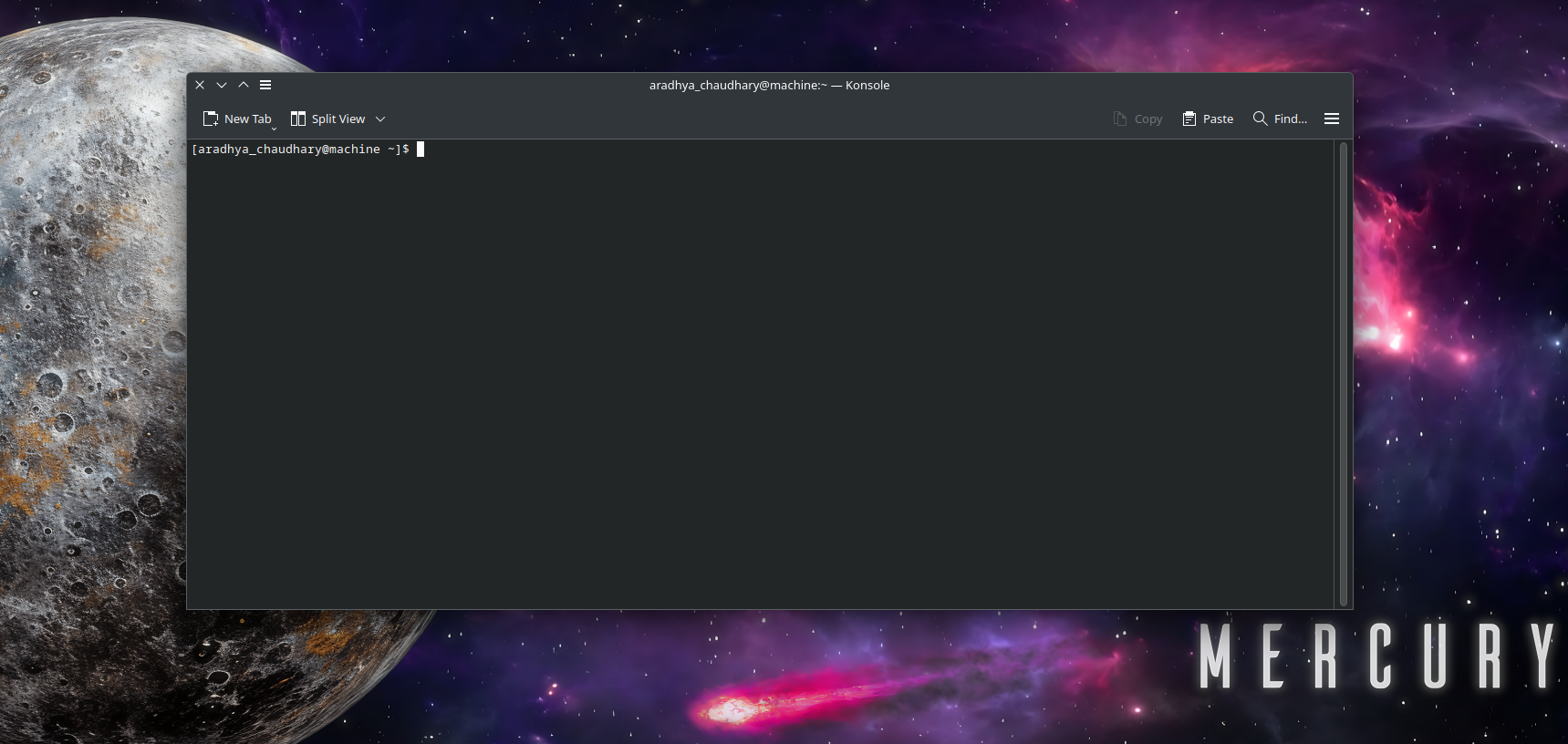

C. Arch Linux setup :

Open a terminal from your application menu.

Type the following command to install packages using pacman :

sudo pacman -S wget curl jq python

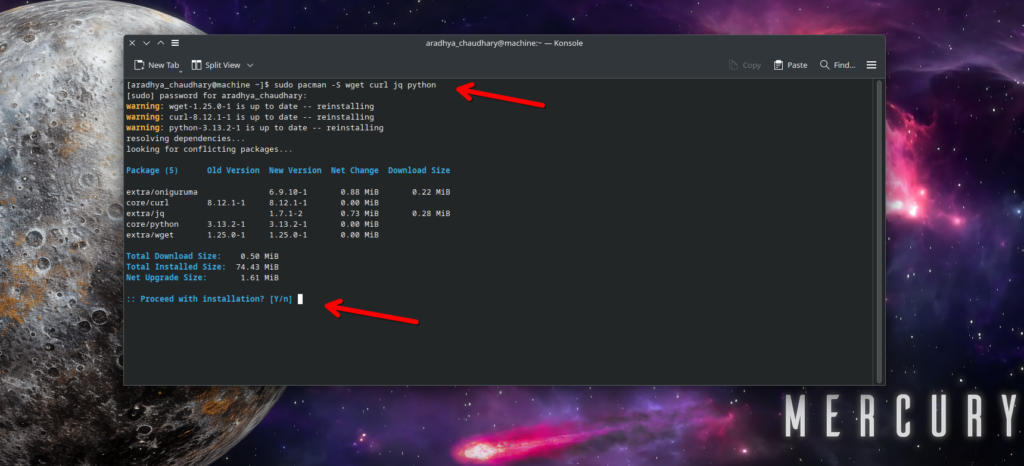

After you run the command, pacman resolves the dependencies, installs the packages, and prints its progress in the terminal.

(Optional) Use the AUR for additional tools such as chromadb or faiss.

SETTING UP RAG SYSTEM ( Arch Linux / Windows 10/11 )

Installing and Configuring Retrieval System

The retrieval system stores document embeddings and fetches the ones most relevant to each query. We will install it in a Python virtual environment, using FAISS (Facebook AI Similarity Search), a library for efficient vector similarity search, and ChromaDB, a vector database for storing and retrieving documents and their embeddings.

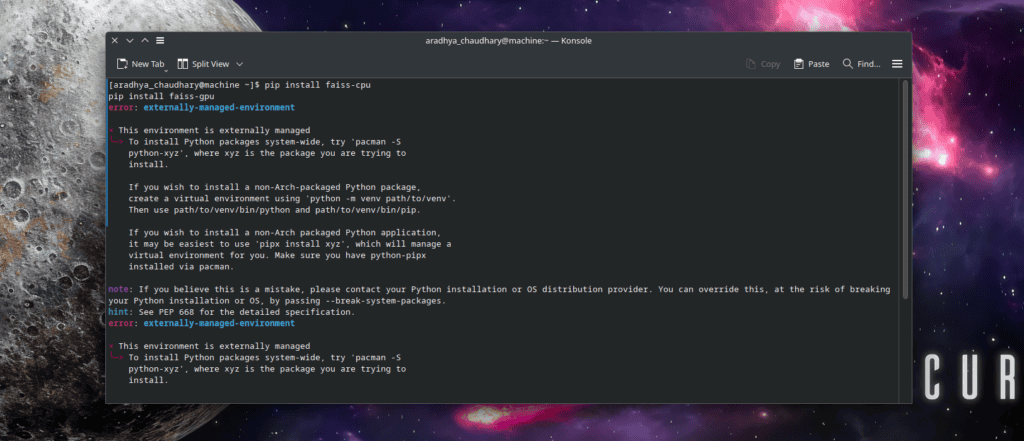

Installing FAISS on Arch Linux via Terminal :

pip install faiss-cpu # For CPU

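pip install faiss-gpu # For GPU acceleration (if available; prebuilt GPU packages may not exist for every Python version)

On Arch Linux, pip may refuse to install packages into the system Python and report an externally-managed-environment error, which looks like this: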

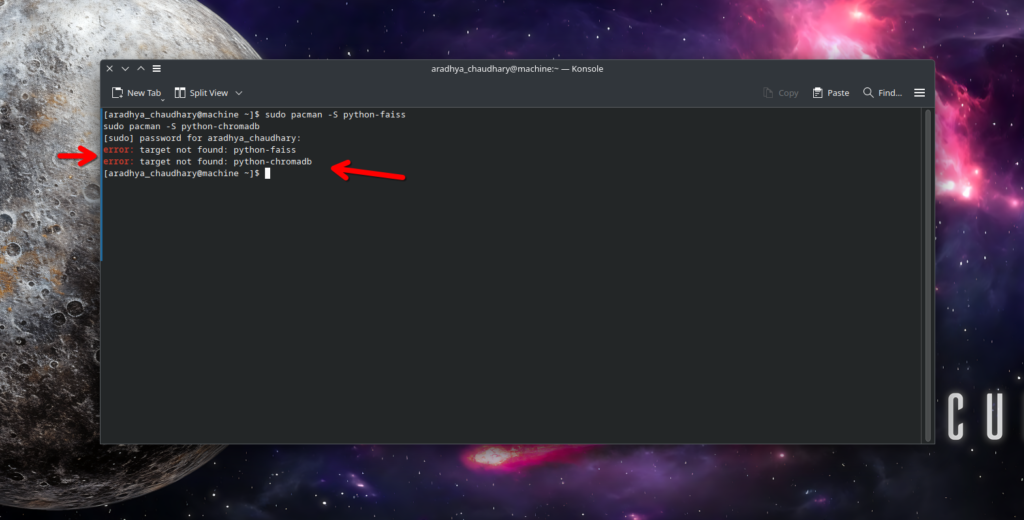

One fix is to install FAISS and ChromaDB with pacman instead (if a package is not in the official repositories, install it from the AUR):

sudo pacman -S python-faiss

sudo pacman -S python-chromadb

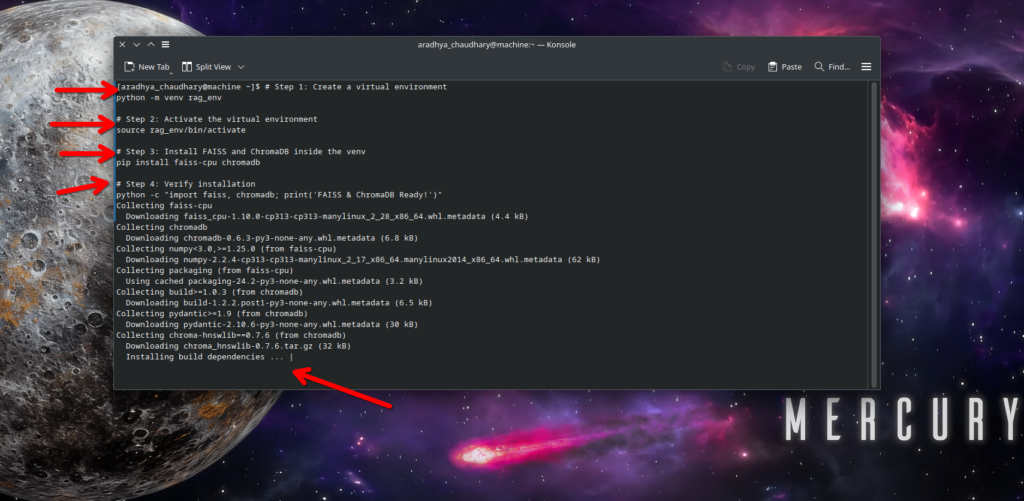

If you still get an error, install the packages inside a virtual environment instead:

# Step 1: Create a virtual environment

python -m venv rag_env

# Step 2: Activate the virtual environment

source rag_env/bin/activate

# Step 3: Install FAISS and ChromaDB inside the venv

pip install faiss-cpu chromadb

# Step 4: Verify installation

python -c "import faiss, chromadb; print('FAISS & ChromaDB Ready!')"

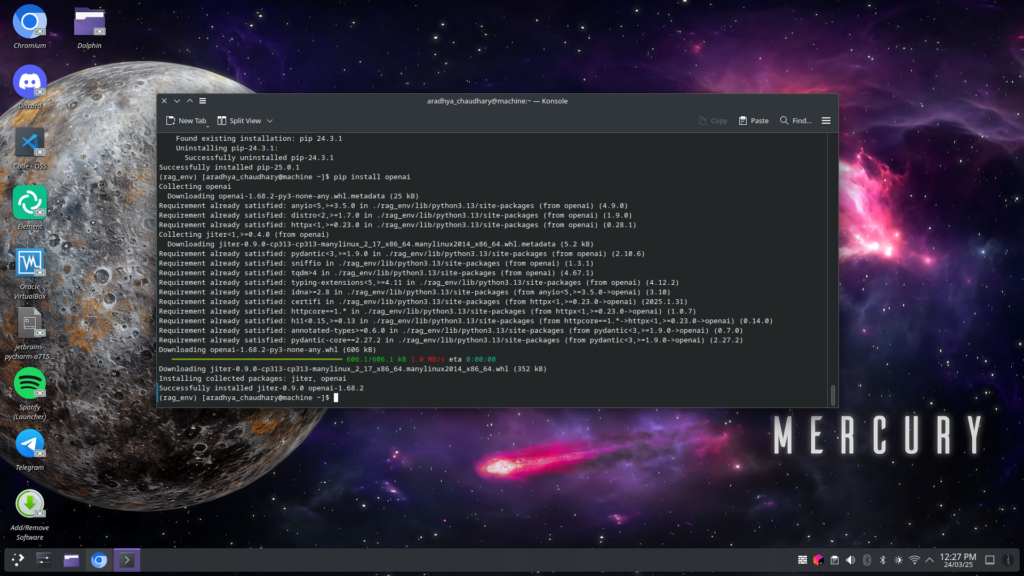

When you paste the above commands into a terminal, pip downloads FAISS, ChromaDB, and their dependencies and installs them:

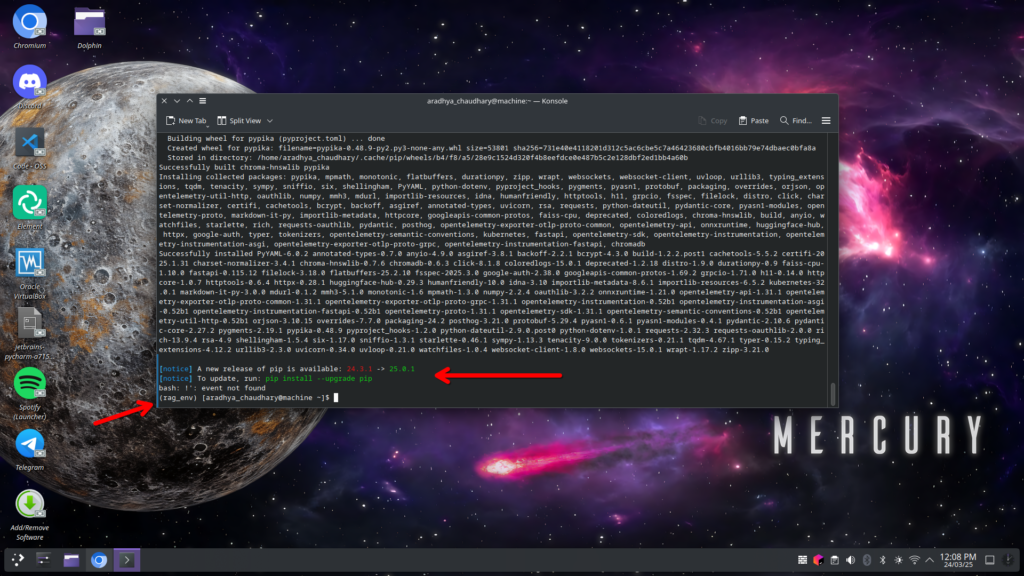

Once all the dependencies have been downloaded and installed, the verification command prints “FAISS & ChromaDB Ready!”.

From now on, every time you work on RAG automation, activate the virtual environment with:

source rag_env/bin/activate

To deactivate it, use:

deactivate

SETTING UP LARGE LANGUAGE MODELS via CLI

Install the OpenAI Python client library with:

pip install openai

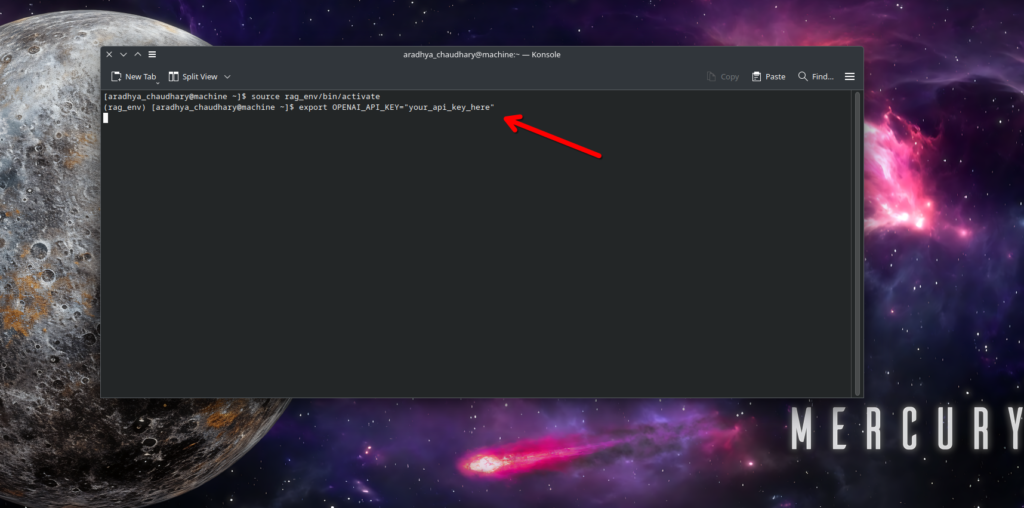

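Export your API key as an environment variable instead of hard-coding it in scripts (the OpenAI Python client reads OPENAI_API_KEY automatically):

export OPENAI_API_KEY="your_openai_api_key"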

Verify your installation with these commands:

python -c "import openai; print('OpenAI API Ready')"

llama-cli --help # Only needed if you installed llama.cpp for local models

The first command should print “OpenAI API Ready”.

Running Vector Database Services via Bash

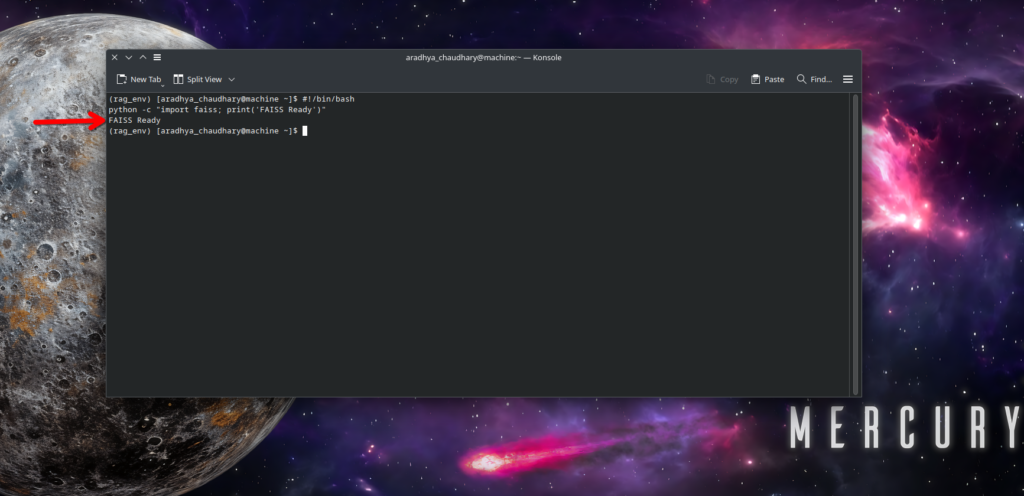

Option 1 : Running FAISS from a bash script

#!/bin/bash

python -c "import faiss; print('FAISS Ready')"

Save this as run_faiss.sh and execute it :

chmod +x run_faiss.sh

./run_faiss.sh

Option 2 : Running ChromaDB as a Background Service

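Start the ChromaDB server in the background with the chroma command-line tool installed by the chromadb package:

chroma run --host 0.0.0.0 --port 8000 &

Binding to 0.0.0.0 makes the server reachable from other machines; use --host localhost if you only need local access.

Option 3 : Creating and Managing Data Pipelines with Bash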

A complete RAG workflow includes :

- Data Collection

- Embedding Creation

- Retrieval and Augmentation

- Text Generation

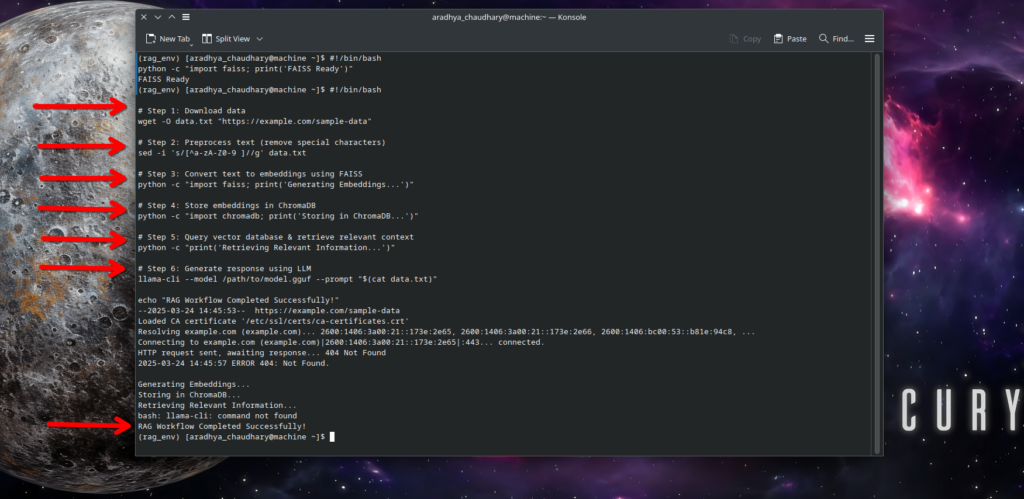

Bash Script to Automate a Simple RAG Pipeline

#!/bin/bash
set -e # Stop at the first step that fails

# Step 1: Download data
wget -O data.txt "https://example.com/sample-data"

# Step 2: Preprocess text (remove special characters)
sed -i 's/[^a-zA-Z0-9 ]//g' data.txt

# Step 3: Convert text to embeddings (placeholder: FAISS indexes embeddings but does not create them)
python -c "import faiss; print('Generating Embeddings...')"

# Step 4: Store embeddings in ChromaDB (placeholder)
python -c "import chromadb; print('Storing in ChromaDB...')"

# Step 5: Query vector database & retrieve relevant context (placeholder)
python -c "print('Retrieving Relevant Information...')"

# Step 6: Generate response using LLM (this skeleton sends the whole file; a real pipeline sends only the retrieved context)
llama-cli --model /path/to/model.gguf --prompt "$(cat data.txt)"

echo "RAG Workflow Completed Successfully!"

Save the script as rag_pipeline.sh and run it:

chmod +x rag_pipeline.sh

./rag_pipeline.sh

AUTOMATING DATA PREPROCESSING WITH BASH

Web Scraping & Data Collection

Web scraping lets us fetch data from online sources. Let's look at a few commands for it:

Example 1 : Downloading a webpage with < wget >

wget -O article.html "https://example.com/article"

This saves the webpage as article.html.

Example 2 : Extracting Text Content with sed & grep

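cat article.html | sed -n '/<p>/,/<\/p>/p' | sed 's/<[^>]*>//g' > content.txt

Here <p> is the HTML paragraph tag: the first sed prints the lines from an opening <p> to a closing </p>, and the second sed strips all remaining HTML tags, leaving the paragraph text. Note that the pattern only matches a literal <p>, so paragraphs written with attributes, such as <p class="intro">, are skipped.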
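If your pages put attributes on their paragraph tags, a parser is more forgiving than a regular expression. The following sketch is an alternative to the sed command, not part of the original workflow: it uses Python's standard-library `html.parser` to collect the text of every `<p>` element, whatever its attributes. The class name, function name, and file name in the usage comment are hypothetical.

```python
import sys
from html.parser import HTMLParser


class ParagraphExtractor(HTMLParser):
    """Collect the text of every <p> element, ignoring the tag's attributes."""

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True by default, so "&amp;" arrives as "&"
        self.paragraphs: list[str] = []
        self._inside = False
        self._buffer: list[str] = []

    def _flush(self) -> None:
        # Collapse whitespace and keep only non-empty paragraphs.
        text = " ".join("".join(self._buffer).split())
        if text:
            self.paragraphs.append(text)
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            if self._inside:  # treat a new <p> as closing an unclosed one
                self._flush()
            self._inside = True

    def handle_endtag(self, tag):
        if tag == "p" and self._inside:
            self._flush()
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self._buffer.append(data)

    def close(self) -> None:
        super().close()
        if self._inside:  # flush a trailing paragraph that was never closed
            self._flush()
            self._inside = False


def extract_paragraphs(html: str) -> list[str]:
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    return parser.paragraphs


if __name__ == "__main__":
    # Hypothetical usage: python extract_paragraphs.py article.html > content.txt
    if len(sys.argv) > 1:
        with open(sys.argv[1], encoding="utf-8") as f:
            print("\n".join(extract_paragraphs(f.read())))
```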

Example 3 : Using < curl > and < pup > to extract data from HTML

First install pup from the given command :

sudo pacman -S pup

(If pacman cannot find pup, install it from the AUR.) Now, extract the article text:

curl -s "https://example.com/article" | pup 'p text{}' > content.txt

Replace https://example.com/article with the URL of the article you want to extract text from.

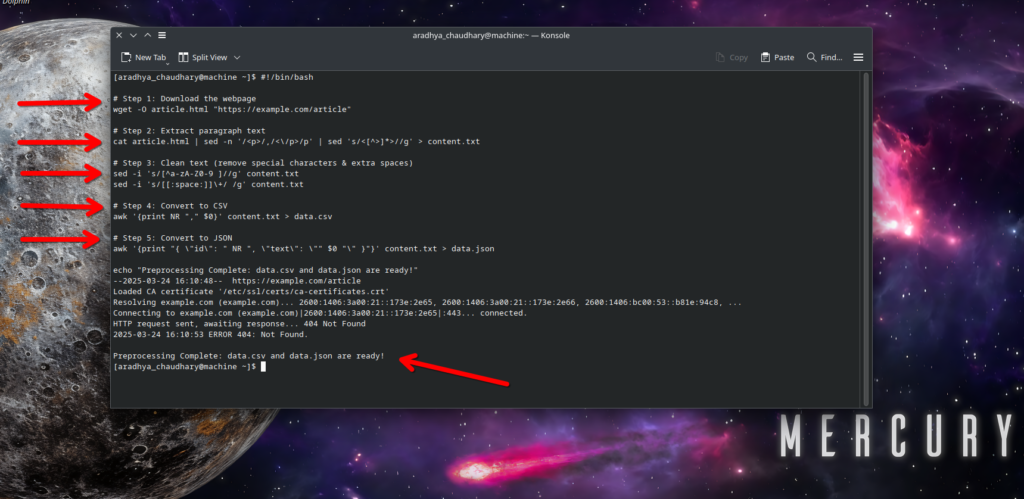

Automated Bash Script for Preprocessing

#!/bin/bash

# Step 1: Download the webpage

wget -O article.html "https://example.com/article"

# Step 2: Extract paragraph text

cat article.html | sed -n '/<p>/,/<\/p>/p' | sed 's/<[^>]*>//g' > content.txt

# Step 3: Clean text (remove special characters & extra spaces)

sed -i 's/[^a-zA-Z0-9 ]//g' content.txt

sed -i 's/[[:space:]]\+/ /g' content.txt

# Step 4: Convert to CSV

awk '{print NR "," $0}' content.txt > data.csv

# Step 5: Convert to JSON Lines (one JSON object per line, saved as data.json)

awk '{print "{ \"id\": " NR ", \"text\": \"" $0 "\" }"}' content.txt > data.json

echo "Preprocessing Complete: data.csv and data.json are ready!"
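Step 5 writes one JSON object per line (the JSON Lines format), which is how the retrieval script later reads data.json. The awk one-liner is only safe because Step 3 has already removed quotes and backslashes; if you relax that cleaning, text containing them would produce invalid JSON. A sketch of an alternative conversion in Python (not part of the original script; the function and file names are hypothetical) lets `json.dumps` handle the escaping:

```python
import json
import sys


def to_json_lines(lines: list[str]) -> str:
    """Return one JSON object per input line, numbered from 1 like awk's NR."""
    records = []
    for number, text in enumerate(lines, start=1):
        # json.dumps escapes quotes, backslashes and control characters.
        records.append(json.dumps({"id": number, "text": text}))
    return "\n".join(records) + ("\n" if records else "")


if __name__ == "__main__":
    # Hypothetical usage: python to_jsonl.py content.txt > data.json
    if len(sys.argv) > 1:
        with open(sys.argv[1], encoding="utf-8") as f:
            sys.stdout.write(to_json_lines(f.read().splitlines()))
```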

Save the script as preprocess.sh and run it:

chmod +x preprocess.sh

./preprocess.sh

Automating Document Embedding & Vectorization

After preprocessing, the next step in a RAG system is to convert the text into embeddings (numerical vector representations). This section covers:

- Running an embedding model with bash

- Storing and retrieving data in a vector database

- Managing vector indexes for faster retrieval


Automating Embedding Model Execution

To convert text into embeddings we need an embedding model. Two common options are:

- OpenAI’s text-embedding-ada-002 ( Cloud Based API )

- Hugging Face Transformers ( Example – sentence transformers )
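Whichever model you pick, the mechanics are the same: each text becomes a fixed-length vector, and similar texts should get vectors that point in similar directions, which cosine similarity measures. The sketch below shows only those mechanics. Its hashed bag-of-words "embedding" is a toy stand-in chosen for illustration (an assumption, not how text-embedding-ada-002 or sentence-transformers work); real models capture meaning rather than shared words.

```python
import hashlib
import math
import re

DIM = 64  # toy size; all-MiniLM-L6-v2 produces 384 dimensions, text-embedding-ada-002 1536


def toy_embed(text: str, dim: int = DIM) -> list[float]:
    """Hash each word into one of `dim` buckets, then L2-normalise the counts."""
    vec = [0.0] * dim
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 for identical directions, 0.0 when either vector is zero."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


if __name__ == "__main__":
    query = toy_embed("how to search vectors")
    for doc in ["FAISS searches vectors quickly", "Bash scripts automate tasks"]:
        print(f"{cosine(query, toy_embed(doc)):.3f}  {doc}")
```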

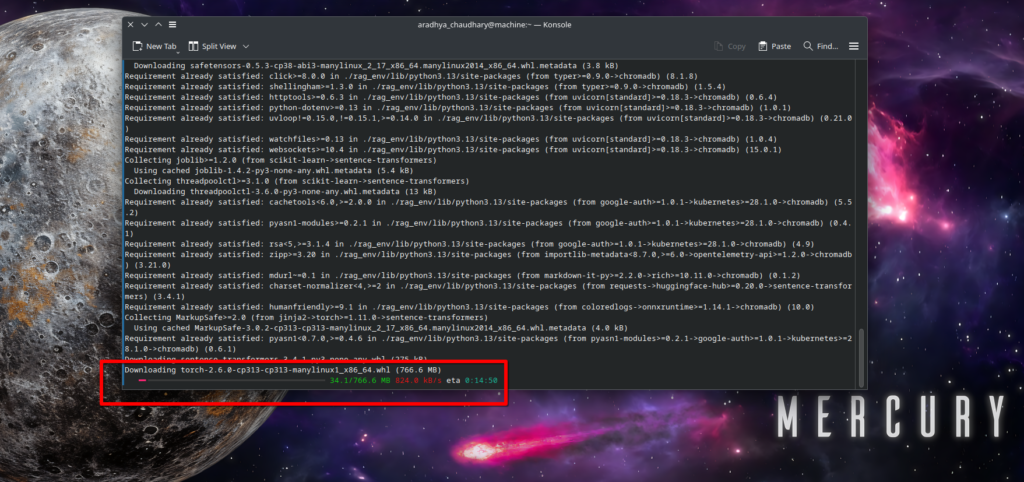

Setting Dependencies in Virtual Environment

python -m venv rag_env

source rag_env/bin/activate

pip install sentence-transformers faiss-cpu chromadb openai

Copy and paste the above commands into a terminal:

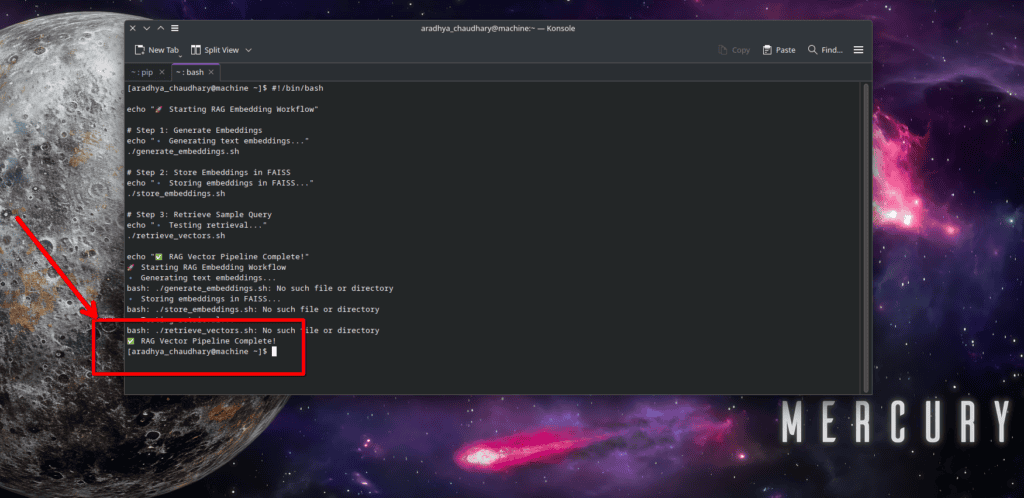

Automating the entire workflow

It is convenient to run all steps in a single go, so we use a master script that calls each stage in order:

#!/bin/bash
set -e # Stop the workflow if any stage fails

echo "Starting RAG Embedding Workflow"

# Step 1: Generate Embeddings

echo "Generating text embeddings..."

./generate_embeddings.sh

# Step 2: Store Embeddings in FAISS

echo "Storing embeddings in FAISS..."

./store_embeddings.sh

# Step 3: Retrieve Sample Query

echo "Testing retrieval..."

./retrieve_vectors.sh

echo "✅ RAG Vector Pipeline Complete!"

The following table summarizes each step, the tool it uses, and the command that runs it:

| STEP | TOOL USED | COMMAND |
|---|---|---|
| Generate embeddings | sentence-transformers | ./generate_embeddings.sh |
| Store embeddings in FAISS | FAISS | ./store_embeddings.sh |
| Retrieve similar texts | FAISS | ./retrieve_vectors.sh |
| Optimize FAISS index | FAISS (IVF) | ./store_optimized_embeddings.sh |
| Automate everything | Bash script | ./run_rag_pipeline.sh |
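The "Optimize FAISS index" row refers to an IVF (inverted file) index. As a rough illustration of the idea, and not of FAISS's actual implementation, the sketch below assigns every vector to its nearest centroid and, at query time, scans only the `nprobe` closest lists instead of the whole collection. For simplicity it uses the first `nlist` vectors as centroids (an assumption); FAISS's IndexIVFFlat instead learns its centroids with k-means during a training step. Probing fewer lists is faster but can miss true neighbours, and can return fewer than k results.

```python
def l2(a, b):
    """Squared Euclidean distance, the metric reported by FAISS's L2 indexes."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


class ToyIVFIndex:
    """Inverted-file index sketch: vectors are grouped into lists by nearest centroid."""

    def __init__(self, vectors, nlist):
        self.vectors = vectors
        # Simplification: the first `nlist` vectors act as centroids (no k-means training).
        self.centroids = vectors[:nlist]
        self.lists = [[] for _ in self.centroids]
        for idx, vec in enumerate(vectors):
            nearest = min(range(len(self.centroids)), key=lambda c: l2(vec, self.centroids[c]))
            self.lists[nearest].append(idx)

    def search(self, query, k, nprobe=1):
        """Return up to k (distance, index) pairs, scanning only the nprobe closest lists."""
        order = sorted(range(len(self.centroids)), key=lambda c: l2(query, self.centroids[c]))
        candidates = [idx for c in order[:nprobe] for idx in self.lists[c]]
        return sorted((l2(query, self.vectors[i]), i) for i in candidates)[:k]


if __name__ == "__main__":
    data = [[0.0, 0.0], [10.0, 10.0], [0.5, 0.0], [10.0, 9.0], [0.0, 1.0]]
    index = ToyIVFIndex(data, nlist=2)
    print(index.search([0.2, 0.1], k=2, nprobe=1))
```

With `nprobe` equal to the number of lists, every vector is scanned and the result matches an exhaustive search.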

AUTOMATING QUERY PROCESSING in RAG

Fetching Queries from Users via CLI

#!/bin/bash

echo "🔍 Enter your query:"

read user_query

# Save the query to a temporary file

echo "$user_query" > query.txt

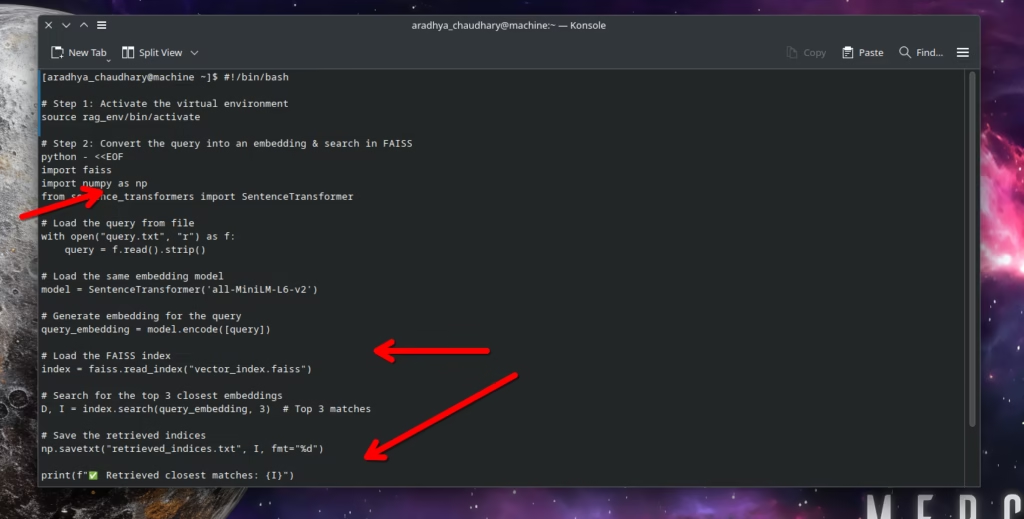

Automating Search in the Vector Database via CLI

The saved query is:

- Converted into an embedding using the same model used during document embedding

- Searched in the FAISS vector database to retrieve the closest matches

#!/bin/bash

# Step 1: Activate the virtual environment

source rag_env/bin/activate

# Step 2: Convert the query into an embedding & search in FAISS

python - <<EOF

import faiss

import numpy as np

from sentence_transformers import SentenceTransformer

# Load the query from file

with open("query.txt", "r") as f:
    query = f.read().strip()

# Load the same embedding model

model = SentenceTransformer('all-MiniLM-L6-v2')

# Generate embedding for the query

query_embedding = model.encode([query])

# Load the FAISS index

index = faiss.read_index("vector_index.faiss")

# Search for the top 3 closest embeddings

D, I = index.search(query_embedding, 3) # Top 3 matches

# Save the retrieved indices

np.savetxt("retrieved_indices.txt", I, fmt="%d")

print(f"Retrieved closest matches: {I}")

EOF
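The script saves only I, but `index.search` returns two arrays: D with the distances and I with the positions of the matching vectors, one row per query. Assuming `vector_index.faiss` is a flat L2 index such as IndexFlatL2 (the original does not say which index type it holds), the pure-Python sketch below mimics that exact search. Like FAISS, it fills I with -1 when the index holds fewer than k vectors, so code that consumes the indices should skip negative values. The infinite placeholder distance is an assumption of this sketch.

```python
def flat_l2_search(database, queries, k):
    """Exhaustive search returning (D, I) lists shaped like FAISS's output: one row per query."""
    D, I = [], []
    for q in queries:
        # Squared L2 distance from the query to every stored vector, nearest first.
        scored = sorted(
            (sum((a - b) ** 2 for a, b in zip(q, vec)), idx)
            for idx, vec in enumerate(database)
        )[:k]
        missing = k - len(scored)
        # Pad with index -1 (and a placeholder distance) when there are fewer than k vectors.
        D.append([d for d, _ in scored] + [float("inf")] * missing)
        I.append([i for _, i in scored] + [-1] * missing)
    return D, I


if __name__ == "__main__":
    stored = [[0.0, 0.0], [3.0, 4.0]]
    print(flat_l2_search(stored, [[0.0, 0.0]], k=3))
```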

Fetching Relevant Context for Generation

Once we have the indices of the closest matches, we need to:

- Extract the original text corresponding to those document indices

- Format the text for use in an LLM

Here is the code snippet :

#!/bin/bash

# Step 1: Activate the virtual environment

source rag_env/bin/activate

# Step 2: Extract relevant text using retrieved indices

python - <<EOF

import json

import numpy as np

# Load the retrieved indices (atleast_1d keeps a single index iterable)
indices = np.atleast_1d(np.loadtxt("retrieved_indices.txt", dtype=int))

# Load the dataset (data.json holds one JSON object per line)
with open("data.json", "r") as f:
    docs = [json.loads(line)["text"] for line in f if line.strip()]

# Extract the top matching documents (FAISS returns -1 for missing results)
retrieved_docs = [docs[i] for i in indices if 0 <= i < len(docs)]

# Save the retrieved contexts to a file
with open("retrieved_context.txt", "w") as f:
    for doc in retrieved_docs:
        f.write(doc + "\n\n")

print("Retrieved context saved in retrieved_context.txt")

EOF

In my case this step raised an error because the required input files had not been created in my working directory. Run the previous steps first so that retrieved_indices.txt and data.json exist, then give it a try.
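The script above joins the passages with blank lines. Because an LLM accepts only a limited amount of input, one possible refinement (not part of the original pipeline) is to number each passage so the model can refer to it, and to stop once a character budget is reached. The function name and the 2,000-character default below are assumptions; tune the budget to your model's context window.

```python
def build_context(passages, max_chars=2000):
    """Number each retrieved passage and stop before the joined text exceeds max_chars."""
    parts, used = [], 0
    for n, passage in enumerate(passages, start=1):
        block = f"[{n}] {passage.strip()}"
        # Every block after the first is preceded by a blank-line separator ("\n\n").
        extra = len(block) + (2 if parts else 0)
        if used + extra > max_chars:
            break  # keep the ranking order: drop this passage and everything after it
        parts.append(block)
        used += extra
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_context(["FAISS finds similar vectors.", "ChromaDB stores embeddings."]))
```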

AUTOMATING TEXT-GENERATION & RESPONSE HANDLING

Automating Model Calls for GPT API & Llama

There are two ways to integrate LLM into our pipeline :

- Locally hosted models ( Llama.cpp, Ollama, or Hugging Face Transformers )

- Cloud-based APIs ( OpenAI GPT, TogetherAI, Anyscale )

A. Calling the GPT-4 API via Bash

For GPT models, we can call the API directly with curl:

NOTE : The script below is for experimental purposes only; modify it to suit your use case.

#!/bin/bash

# Load the OpenAI API key from the environment (falls back to the placeholder below)
API_KEY="${OPENAI_API_KEY:-your_openai_api_key}"

# Read the retrieved context
context=$(cat retrieved_context.txt)

# Define the user query
query=$(cat query.txt)

# Build the JSON payload with jq so quotes and newlines in the text are escaped correctly
payload=$(jq -n --arg context "$context" --arg query "$query" '{
  model: "gpt-4",
  messages: [
    {role: "system", content: "You are an AI assistant that answers questions using provided context."},
    {role: "user", content: ("Context: " + $context + "\n\nQuestion: " + $query)}
  ]
}')

# Send request to OpenAI API
response=$(curl -s -X POST "https://api.openai.com/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $API_KEY" \
  -d "$payload" | jq -r '.choices[0].message.content')

# Save response to a file

echo "$response" > generated_response.txt

echo "Response saved in generated_response.txt"

In the above script, replace “your_openai_api_key” with your API key, then save the script as “generate_gpt_response.sh”.
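Piping curl straight into `jq -r '.choices[0].message.content'` hides failures: when a request is rejected, the API returns an `error` object instead of `choices`, and jq prints `null`. As a sketch of more defensive handling (the helper name and the sample payloads in the usage are assumptions for illustration), the Python function below extracts the answer from the raw JSON that curl returns and raises with the API's error message otherwise.

```python
import json


def extract_answer(raw_response: str) -> str:
    """Return the assistant message from a Chat Completions response, or raise on an API error."""
    data = json.loads(raw_response)
    if "error" in data:
        # Error responses carry a human-readable message under error.message.
        message = (data["error"] or {}).get("message", "unknown error")
        raise RuntimeError(f"OpenAI API error: {message}")
    try:
        return data["choices"][0]["message"]["content"]
    except (KeyError, IndexError, TypeError) as exc:
        raise RuntimeError("Unexpected response format") from exc


if __name__ == "__main__":
    sample = json.dumps({"choices": [{"message": {"role": "assistant", "content": "Hello"}}]})
    print(extract_answer(sample))
```

In Bash alone, `jq -e` gives a lighter check: it exits with a non-zero status when the extracted value is null or false, which the script can test before saving the response.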

Make the saved script executable and run it:

chmod +x generate_gpt_response.sh

./generate_gpt_response.sh

B. Running a Local Llama Model

Llama.cpp and Ollama let you run models locally, without an internet connection.

Example: Calling Llama.cpp Model :

#!/bin/bash

# Read the retrieved context and query

context=$(cat retrieved_context.txt)

query=$(cat query.txt)

# Call the llama.cpp CLI with context & query (printf turns \n into real newlines)
llama-cli -m models/llama-7B.gguf -p "$(printf 'Context: %s\n\nQuestion: %s' "$context" "$query")" > generated_response.txt

echo "Response saved in generated_response.txt"

Save the script as generate_llama_response.sh and run it:

chmod +x generate_llama_response.sh

./generate_llama_response.sh

Post-Processing Responses for Better Output

LLM responses might contain :

- unwanted extra details

- repetitive content

- lack of structured formatting

To clean and format responses, we can use sed, awk, and grep in Bash :

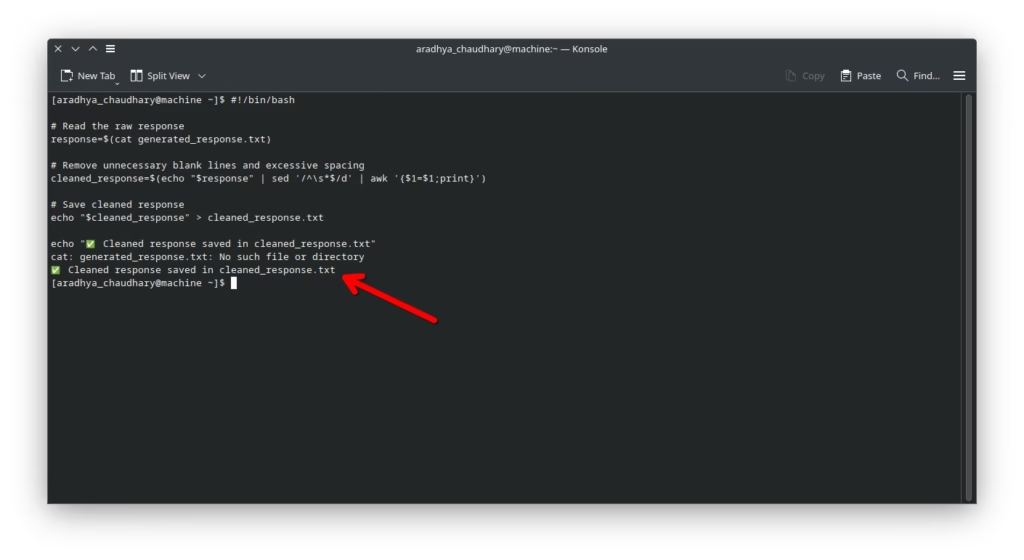

#!/bin/bash

# Read the raw response

response=$(cat generated_response.txt)

# Remove unnecessary blank lines and excessive spacing

cleaned_response=$(echo "$response" | sed '/^\s*$/d' | awk '{$1=$1;print}')

# Save cleaned response

echo "$cleaned_response" > cleaned_response.txt

echo " Cleaned response saved in cleaned_response.txt"
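The script handles blank lines and extra spacing but not the repetitive content listed above. A minimal Python sketch of a cleanup that also drops exact duplicate lines (keeping the first occurrence, in order) follows; the function name is hypothetical, and in Bash the same de-duplication can be added by appending `awk '!seen[$0]++'` to the pipeline.

```python
def clean_response(text: str) -> str:
    """Trim lines, collapse inner whitespace, and drop blank and exact duplicate lines."""
    seen = set()
    cleaned = []
    for line in text.splitlines():
        normalized = " ".join(line.split())  # roughly what awk '{$1=$1;print}' does
        if normalized and normalized not in seen:
            seen.add(normalized)
            cleaned.append(normalized)
    return "\n".join(cleaned)


if __name__ == "__main__":
    raw = "  Answer:   FAISS is fast.\n\nAnswer: FAISS is fast.\nIt indexes   vectors.\n"
    print(clean_response(raw))
```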

Formatting and Saving Responses for Further Use

A. Formatting Response into Markdown

You can use the following experimental script :

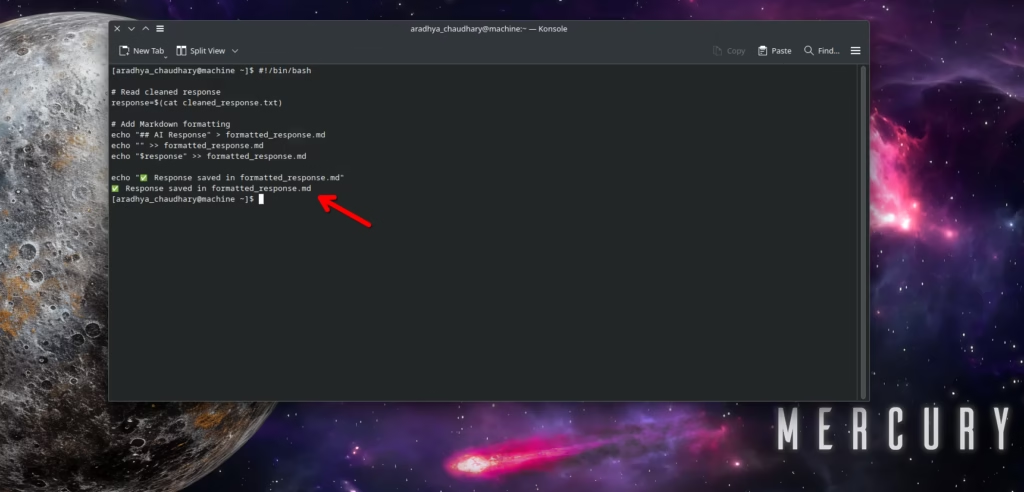

#!/bin/bash

# Read cleaned response

response=$(cat cleaned_response.txt)

# Add Markdown formatting

echo "## AI Response" > formatted_response.md

echo "" >> formatted_response.md

echo "$response" >> formatted_response.md

echo "Response saved in formatted_response.md"

Save the script as format_response.sh and run it:

chmod +x format_response.sh

./format_response.sh

B. Formatting Response into JSON

Experimental snippet for formatting :

#!/bin/bash

# Read cleaned response

response=$(cat cleaned_response.txt)

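# Convert response into JSON format (jq escapes quotes and newlines in the response)
jq -n --arg response "$response" '{response: $response}' > formatted_response.json

echo "✅ Response saved in formatted_response.json"

Save the script as format_response_json.sh and run it: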

chmod +x format_response_json.sh

./format_response_json.sh

Automating the Entire Text Generation Pipeline via CLI

The following master script chains the steps into a single text-generation pipeline:

#!/bin/bash

echo "Running RAG-based AI Response Generation"

# Step 1: Fetch User Query

echo "Fetching user query..."

./query_pipeline.sh

# Step 2: Generate AI Response

echo "Calling LLM to generate response..."

./generate_gpt_response.sh # Or use `./generate_llama_response.sh` for local models

# Step 3: Post-process the response

echo "Cleaning and formatting response..."

./post_process_response.sh

# Step 4: Format response for further use

echo "Saving formatted response..."

./format_response.sh

echo "RAG-based AI Response Generation Complete!"

Save it as generate_full_response.sh and run the pipeline:

chmod +x generate_full_response.sh

./generate_full_response.sh

REAL WORLD USE CASES & APPLICATIONS

Automating Research & Knowledge Retrieval

USE CASE :

Academic researchers, teachers, professors, universities, and professionals need quick access to relevant information in large text datasets, PDFs, and scientific papers.

How RAG + CLI Helps:

- Searches large document collections automatically using vector databases

- Retrieves highly relevant context without manually browsing papers

- Generates concise summaries with AI models such as GPT or Llama

Example CLI Pipeline :

./query_pipeline.sh # Fetch & process query

./generate_gpt_response.sh # Generate AI-based summary

./post_process_response.sh # Clean up the raw response

cat cleaned_response.txt # View the final answer

Potential Enhancements :

- Automate data ingestion from Google Scholar, arXiv, or company databases

- Integrate with speech-to-text for voice-based queries

Automating Customer Support Chatbots

USE CASE :

Companies and call centers often deal with repetitive customer queries; a chatbot can automate these support tasks.

How RAG + CLI Helps :

- Uses existing FAQ documents as a knowledge base

- Provides instant AI-generated responses

- Can be deployed in Slack, Telegram, or a CLI chatbot

Example Chatbot Script :

#!/bin/bash

while true; do

echo "💬 Enter your query (or type 'exit' to quit):"

read -r user_query

if [ "$user_query" == "exit" ]; then

echo "👋 Goodbye!"

break

fi

echo "$user_query" > query.txt

./generate_full_response.sh

cat formatted_response.md

done

Potential Enhancements :

- Deploy as an API backend for websites

- Use speech-to-text for voice-based support

Automating Internal Documentation Search for Companies

USE CASE :

Companies need quick search across large internal documentation such as wikis, PDFs, and Confluence pages.

How RAG + CLI Helps :

- Employees can search company knowledge bases from the terminal

- Automatically extracts relevant policies, code snippets, or SOPs

- Improves developer experience by enabling quick access to logs & troubleshooting docs

Example CLI Search Script :

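./query_pipeline.sh # Fetch query & retrieve docs

./generate_gpt_response.sh # Generate AI-assisted answer

./post_process_response.sh # Clean up the raw response

./format_response.sh # Format it as Markdown

cat formatted_response.md # View the response

Potential Enhancements :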

- Index company wiki pages, PDFs, and GitHub repos

- Deploy as a CLI-based internal assistant

CONCLUSION

Summary of What We Have Learned:

- A fully CLI-based RAG pipeline that automates information retrieval

- Setting up vector search with FAISS and ChromaDB and integrating LLMs into the pipeline

- Preprocessing web data into CSV and JSON with Bash tools such as wget, sed, and awk

- Automating document embedding, retrieval, and text generation from Bash

- Calling cloud models such as GPT-4 through their API and running local models such as Llama offline


Frequently Asked Questions (FAQs):

What is Retrieval-Augmented Generation (RAG)?

RAG is a process that enhances Large Language Models (LLMs) by integrating external knowledge during response generation. It retrieves relevant documents from a knowledge base (e.g., FAISS, ChromaDB, or APIs) and uses them as context for generating enriched answers.

Why should I automate RAG workflows?

Automating RAG workflows reduces manual effort, increases speed and scalability, and ensures consistency. Tasks like data preprocessing, embedding generation, retrieval, API calls, and response formatting are streamlined for efficiency.

What are the prerequisites for setting up a RAG system?

- Basics of Bash scripting (loops, variables, error handling).

- Tools like wget, curl, jq, Python, and vector databases (FAISS, ChromaDB).

- An understanding of RAG concepts such as retrieval, augmentation, and generation.

What are the advantages of using Bash scripting for automation?

- Lightweight and efficient.

- Easy integration with other CLI tools.

- Supports scheduling and background execution with ease.

- Fully customizable and secure.

How can I set up FAISS on my system?

Use the following commands:

pip install faiss-cpu

pip install faiss-gpu # If GPU acceleration is available

Which tools are required to integrate LLMs in the RAG pipeline?

- OpenAI GPT or a locally hosted LLM like LLaMA.

- Dependencies like sentence-transformers, FAISS, ChromaDB, and APIs like OpenAI.

Can I use RAG offline?

Yes, you can use offline LLMs like LLaMA for local RAG setups. Install LLaMA and its dependencies locally and interact with them using CLI tools.