Welcome techies !! In this entire article we will be bridging the gap between Agentic AI and Big Data Processing by integrating autonomous AI Agents with PySpark which is a powerful distributed computing framework. Along this guide you will learn how to setup a scalable AI-Powered data pipeline where rigorous agents can ingest, analyze and make decisions on massive datasets in real time.

As we make you walkthrough, you will learn about configuring PySpark, deploying AI-Driven decision making agents and optimizing performance for both Linux ( KDE x11 – Endeavour OS and Windows 10/11 ) users.

1. INTRODUCTION

What is Agentic AI ?

AI Agents or Agentic AI are similar phrases, they refer to autonomous AI systems that can make decisions, execute tasks, adapt dynamically based on real world data. Unlike traditional AI models which rely on predefined inputs and outputs, Agentic AI interacts with external environments, self improves by using a iterative pipeline and optimizes its decision making process over time.

Role of Big Data in AI Decision Making ?

Big Data plays a crucial role in training and improving Agentic AI models by providing :

- Large scale datasets for learning patterns and behaviours

- Real-Time information to adapt to changing scenarios

- Scalable processing to handle complex AI workflows

When we efficiently process massive datasets which requires distributed computing and requires PySpark to be utilized.

Why Integrate Agentic AI with PySpark ?

PySpark is an open source Big Data framework that enables parallel processing across clusters, making it a perfect choice for handling large scale AI workloads.

By integrating PySpark with Agentic AI you can do the following :

- Process massive datasets efficiently without bottlenecks.

- Enable real time AI driven decision making at scale.

- Optimize AI model performance by leveraging distributed computing.

This combination empowers AI systems to learn, adapt and act autonomously,

Key Challenges and Research Guide

This guide is designed for :

- AI Engineers & Data Engineers looking to implement Agentic AI at scale

- Big Data professionals who want to enhance AI workflows with PySpark

- Developers on linux ( KDE x11 – Endeavour OS ) and Windows 10/11 who need a cross platform integration

whether you are on Arch Linux or Windows 11, this guide provides a cross platform step by step implementation.

2. PREREQUISITES & SETUP

For Linux ( KDE x11 – Endeavour OS )

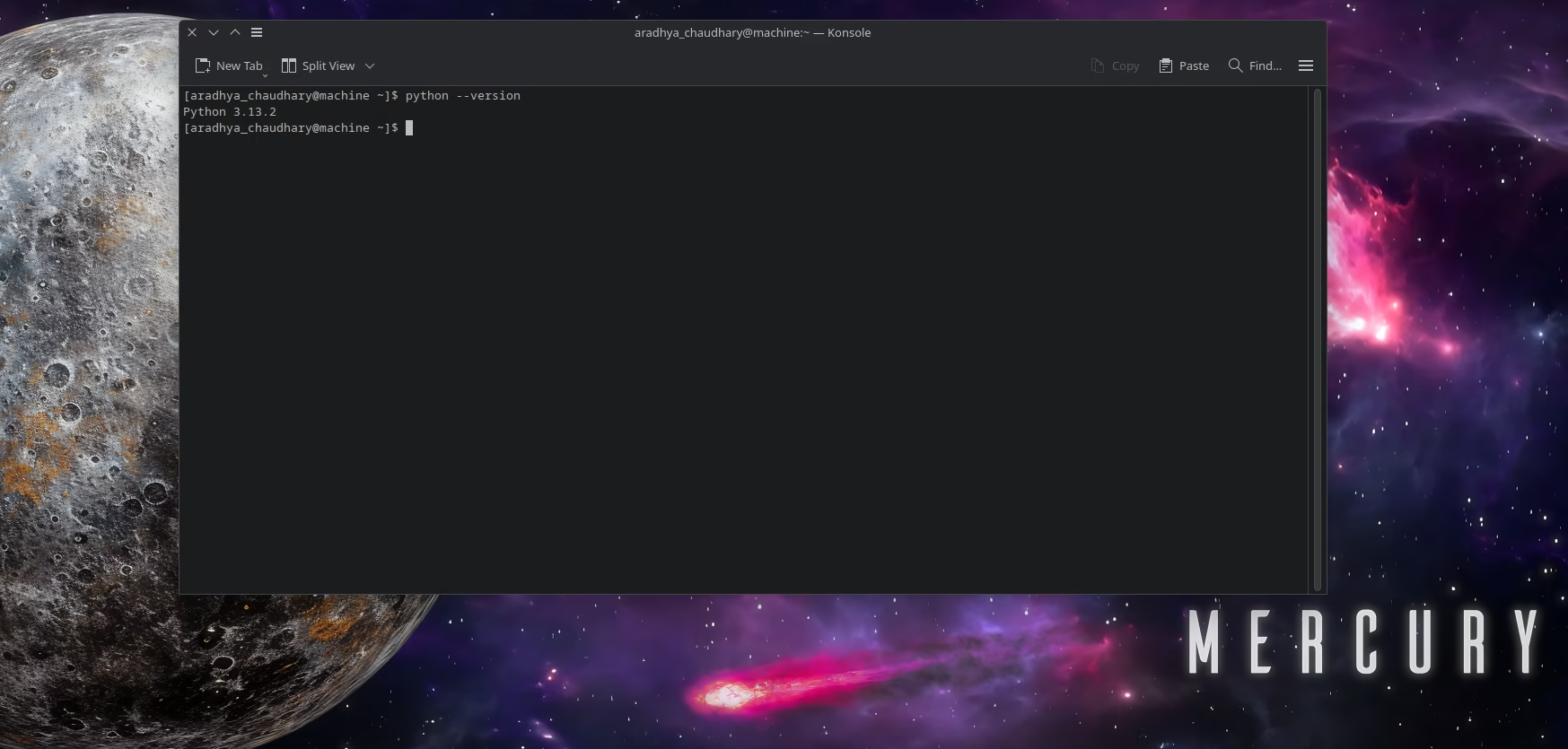

Installing Python & PIP : PySpark requires Python 3.7 or later. Check your Python version

python --version

If Python is not installed, install it using pacman:

sudo pacman -S pythonNOTE : In my case it is installed so I am not showing the practical to install. Kindly refer the above given command.

Next, install pip, the Python package manager:

sudo pacman -S python-pip

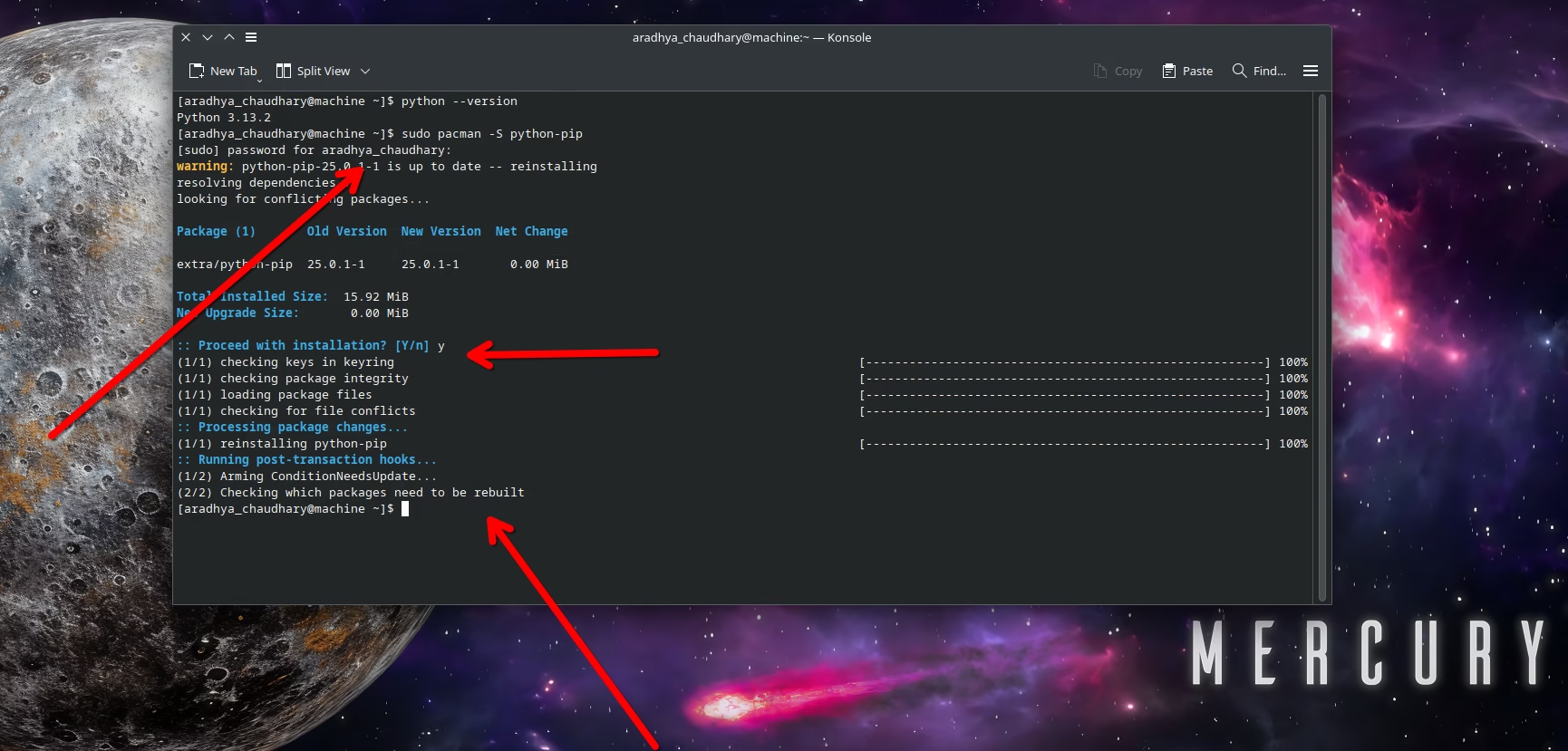

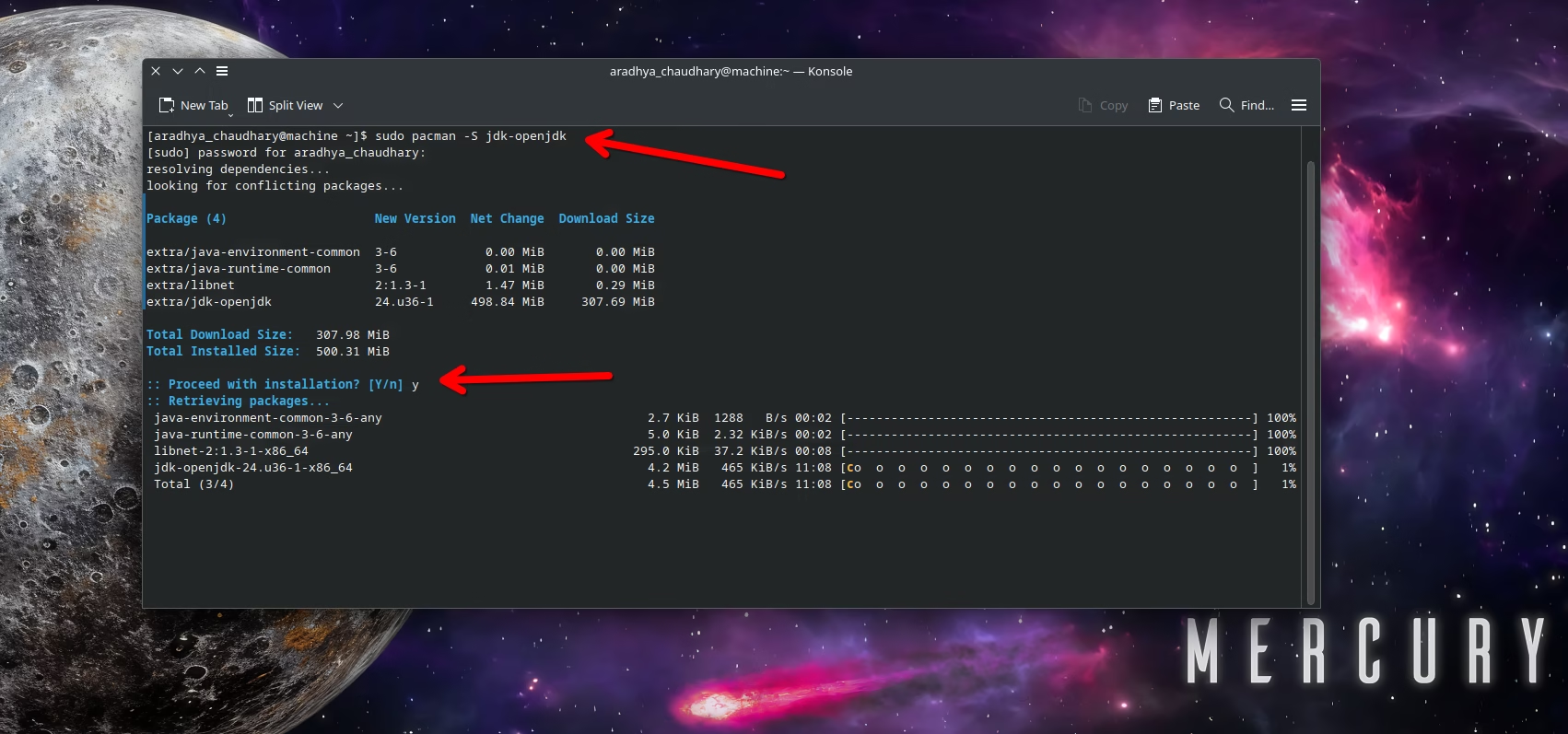

Setting Up Java & Spark

java -versionIf java will not be installed in your system it will show this command :

Now install java using this command :

sudo pacman -S jdk-openjdk

Next, install Apache Spark:

sudo pacman -S apache-sparkVerify installation of Apache Spark using :

spark-shellConfiguring PySpark for Local and Cluster Use

To configure PySpark, add the following environment variables to your ~/.bashrc or ~/.zshrc file:

echo 'export SPARK_HOME=/usr/lib/spark' >> ~/.bashrc

echo 'export PATH=$SPARK_HOME/bin:$PATH' >> ~/.bashrc

echo 'export PYSPARK_PYTHON=python3' >> ~/.bashrc

source ~/.bashrcNOTE : If the above method doesnt work for downloading Apache Spark for you VISIT this LINK

3. Understanding Agentic AI & PySpark Integration

If we want to efficiently integrate Agentic AI with PySpark, we should have knowledge about how autonomous agents work in real time, how PySpark processes Big Data and how these two components can communicate for seamless AI-driven decision-making.

Overview of Autonomous AI Agents

Agentic AI refers to a AI systems capable of autonomous decision making, reasoning, executions and learning about constant human intervention. These agents can perceive the following :

- ✅ Perceive their environment (structured/unstructured data)

- ✅ Process large-scale data for insights

- ✅ Make decisions and take actions based on real-time information

- ✅ Continuously learn and adapt over time

Here are some listed examples of Autonomous AI system :

- AI Powered data analysts that extract insights from real time logs

- Chatbots and virtual assistants that retrieve and generate responses dynamically.

- Automated research agents that collect, summarize, and interpret information.

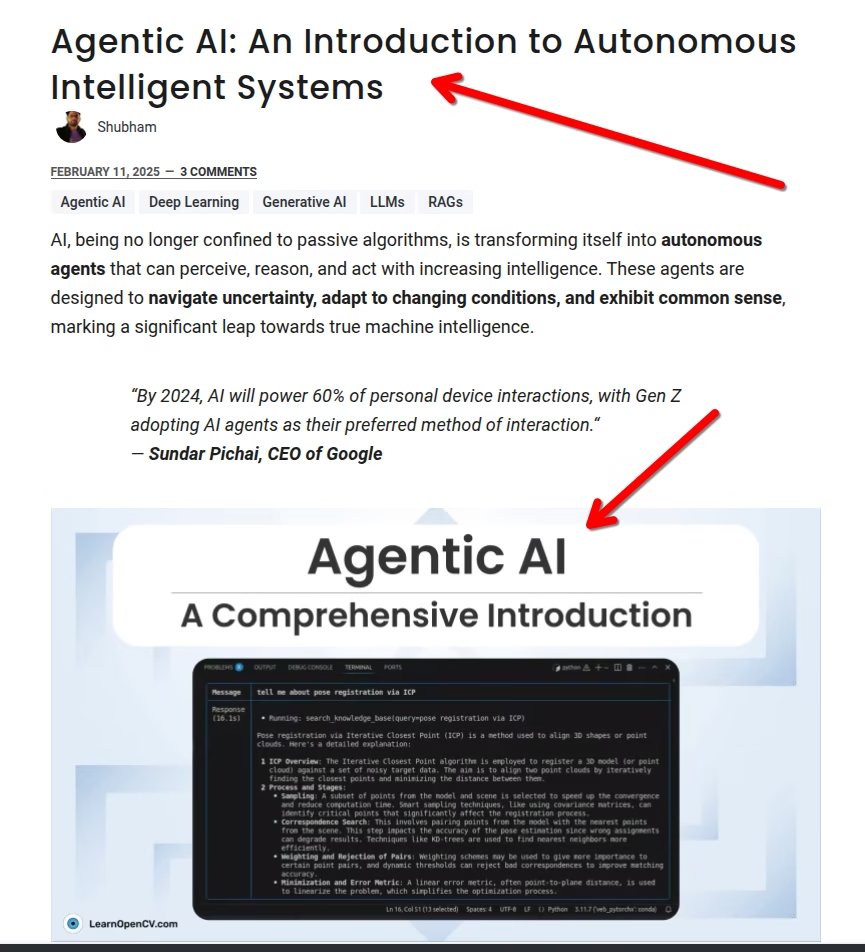

For visualising AI tutorials you may visit the OpenCV website : [ LINK ]

How PySpark Handles Large-Scale Data Processing

PySpark is the Python API for Apache Spark, it is a distributed computing framework built to process massive datasets in parallel. It offers the following :

- 🚀 Distributed processing across multiple nodes

- 🚀 In-memory computation for faster data handling

- 🚀 Support for structured & unstructured data

- 🚀 Real-time data streaming for dynamic AI applications

PySpark achieves this through Resilient Distributed Dataset or ( RDD ) model and dataframe API, allowing it for high speed computations on large scale datasets.

For Agentic AI, PySPark enables the following :

- Processing unstructured text, images, and logs for AI models

- Handling high-volume, real-time data streams for adaptive AI agents

- Scaling AI workloads across distributed clusters

4. Communication Mechanisms Between AI Agents and Spark

For Agentic AI to work effectively with PySpark, we need seamless communication between AI models, decision engines, and big data processing workflows. This integration is typically achieved through:

PySpark User- Defined- Functions ( UDF’s )

- AI models like Chat GPT, Llama can be wrapped inside PySpark UDF

- It enables distributed execution of AI models within Spark

External API Calls

- AI Agents can communicate via Spark with REST API’s

- It allows Spark to send data for AI Analysts and receive decisions in real time

Vector Databases & Embeddings

- AI Agents can store and retrieve vectorized data using PySpark

- It enables context-aware-AI processing for tasks like Retrieval Augmented Generation ( RAG )

Kafka and Spark Streaming

- Enables real time data ingestions from Logs, IOT devices and user inputs

- AI Agents can process incoming data dynamically and provide instant responses.

SOME REFERENCE LINKS :

- Agentic RAG: Step-by-Step Tutorial With Demo Project [ VISIT LINK ]

- Step By Step installation of Spark on Windows, Linux and macOS [ VISIT LINK ]

- PySpark Installation Guide: Setting Up Your Big Data Environment [ VISIT LINK ]

5. Setting Up a PySpark-Based Data Pipeline for Agentic AI

PySpark based pipeline enables Agentic AI to process unstructured and structured data efficiently, This includes loading data, preprocessing it for AI models, and choosing the right distributed storage solutions for scalability.

NOTE : I will provide you the universal code that you can use with any dataset and make a working pipeline in seconds :

- The dataset should be structured and unstructured.

Loading Structured Data in PySpark

from pyspark.sql import SparkSession

# Initialize Spark Session

spark = SparkSession.builder.appName("AgenticAI-Pipeline").getOrCreate()

# Load CSV File

df_csv = spark.read.option("header", True).csv("data/sample_data.csv")

# Load JSON File

df_json = spark.read.json("data/sample_data.json")

# Load Parquet File

df_parquet = spark.read.parquet("data/sample_data.parquet")

df_csv.show()Loading Unstructured Data in PySpark

we can use textFile() or wholeTextFiles() for storing unstructured data.

rdd_text = spark.sparkContext.textFile("data/sample_logs.txt")

rdd_text.take(5) # Preview first 5 linesLoading Data from SQL Databases

df_sql = spark.read \

.format("jdbc") \

.option("url", "jdbc:mysql://localhost:3306/mydb") \

.option("driver", "com.mysql.jdbc.Driver") \

.option("dbtable", "my_table") \

.option("user", "username") \

.option("password", "password") \

.load()

df_sql.show()Preprocessing Data for AI Models

Once the data has been loaded, we need to transform and preprocess it to make it usable for AI Models.

We will be doing basic data cleaning ( Handling missing values, doing simple imputation tasks )

# Drop missing values

df_cleaned = df_csv.dropna()

# Remove duplicates

df_cleaned = df_cleaned.dropDuplicates()

# Filter out irrelevant data

df_filtered = df_cleaned.filter(df_cleaned["column_name"] != "irrelevant_value")Tokenizing and Processing Text Data for NLP

from pyspark.ml.feature import Tokenizer

tokenizer = Tokenizer(inputCol="text_column", outputCol="words")

df_tokenized = tokenizer.transform(df_cleaned)

df_tokenized.show()Vectorizing Data for AI Models (TF-IDF, Word2Vec, etc.)

from pyspark.ml.feature import HashingTF, IDF

hashingTF = HashingTF(inputCol="words", outputCol="rawFeatures", numFeatures=20)

featurizedData = hashingTF.transform(df_tokenized)

idf = IDF(inputCol="rawFeatures", outputCol="features")

idfModel = idf.fit(featurizedData)

rescaledData = idfModel.transform(featurizedData)

rescaledData.select("words", "features").show()6. Case Study : Building an Autonomous AI Data Analysis Agent

In this case study, we will build and deploy an autonomous AI data analysis agent that integrates Agentic AI with PySpark. This agent will:

✅ Ingest large-scale data from multiple sources (structured & unstructured)

✅ Preprocess and clean the data for AI models

✅ Perform intelligent analysis and decision-making autonomously

✅ Generate reports and insights without human intervention

Real-World Use Case

Imagine a company dealing with customer feedback across multiple platforms (social media, emails, reviews, chat logs). Manually analyzing this data is inefficient. An Agentic AI-powered data analyst can automate the process, providing real-time insights into customer sentiment, trending issues, and potential improvements.

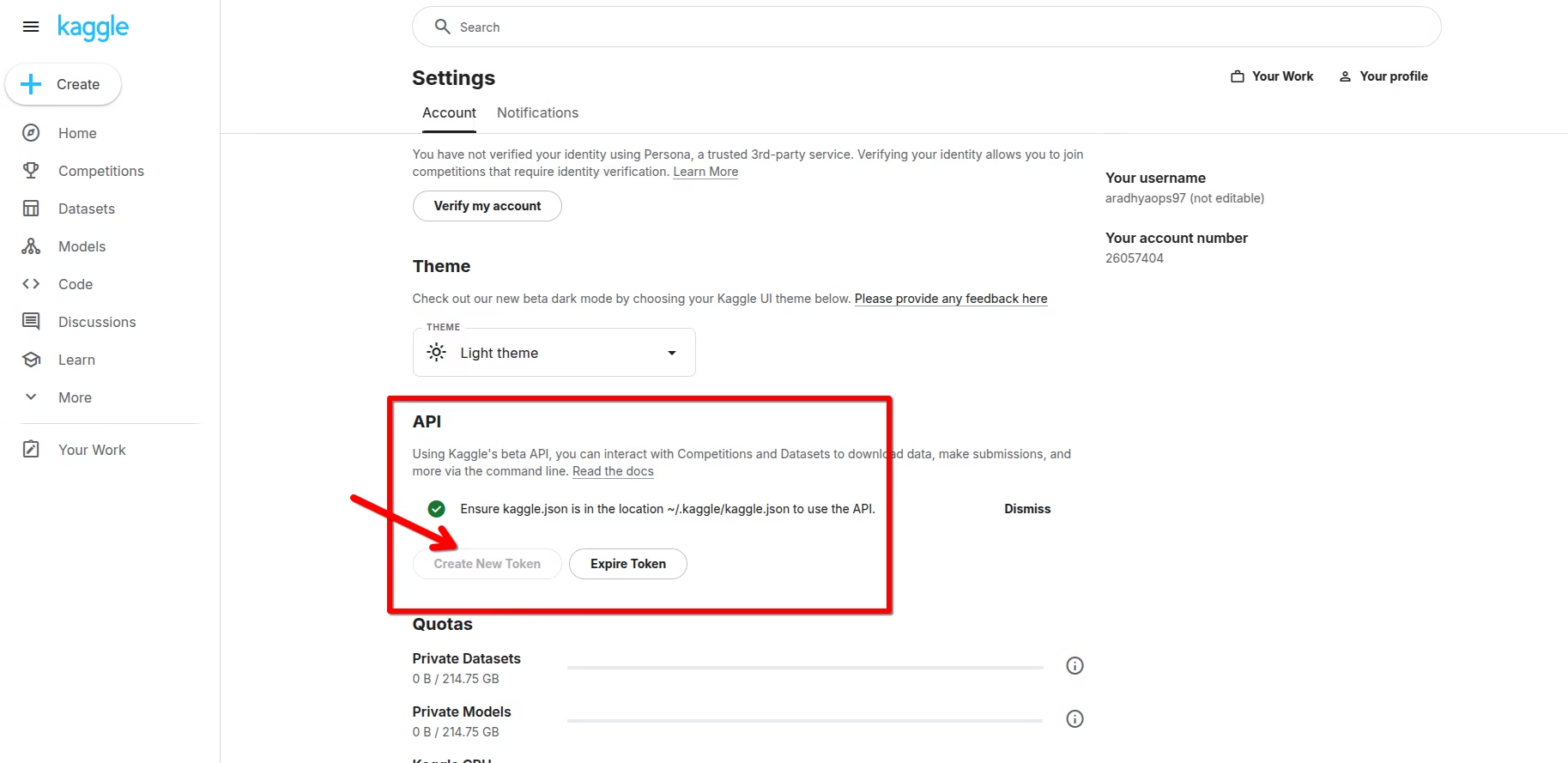

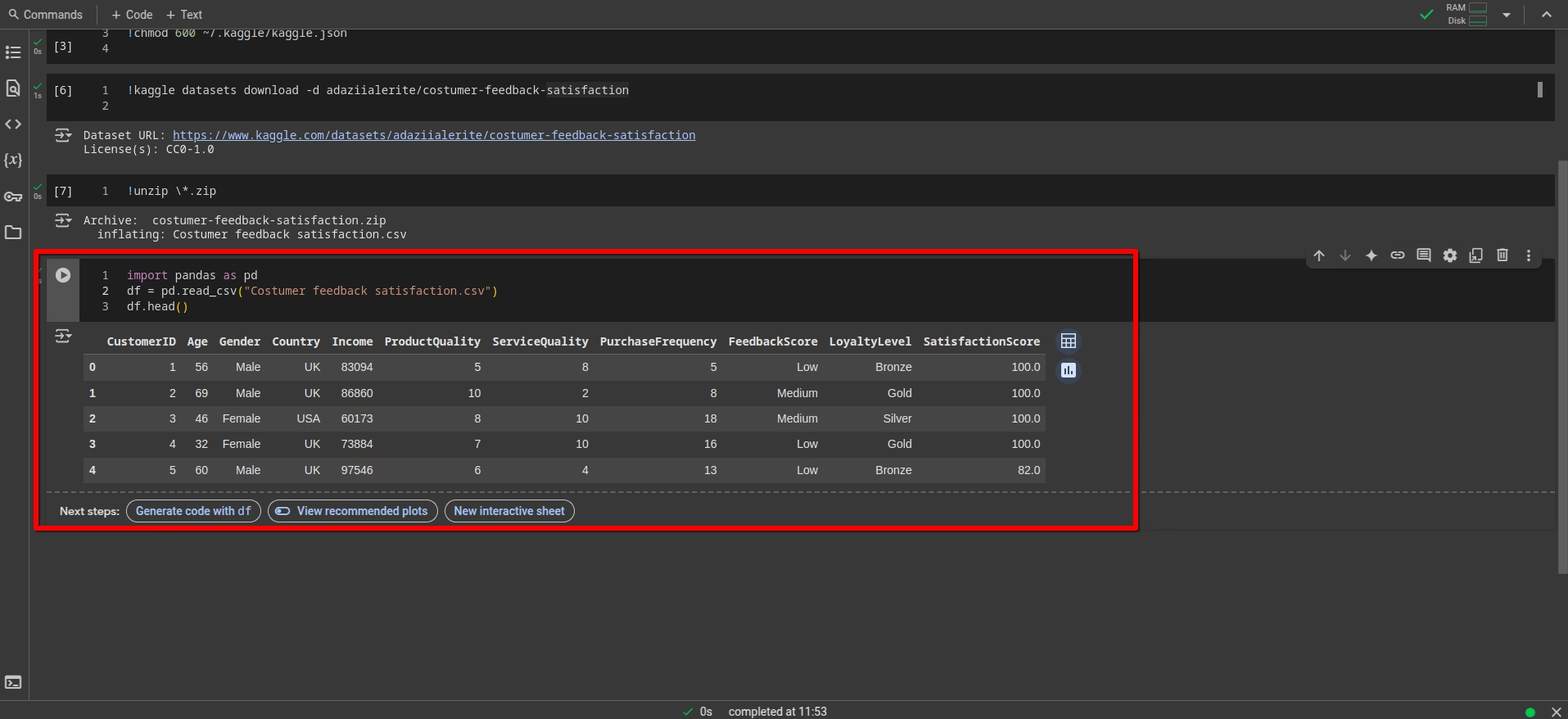

Step 1: Creating API and Fetching Dataset into Google Collab Environment

To load the data you will need a kaggle account : Visit [ kaggle.com ]

Go to > your profile > account > create API Token > kaggle.json ( will be downloaded )

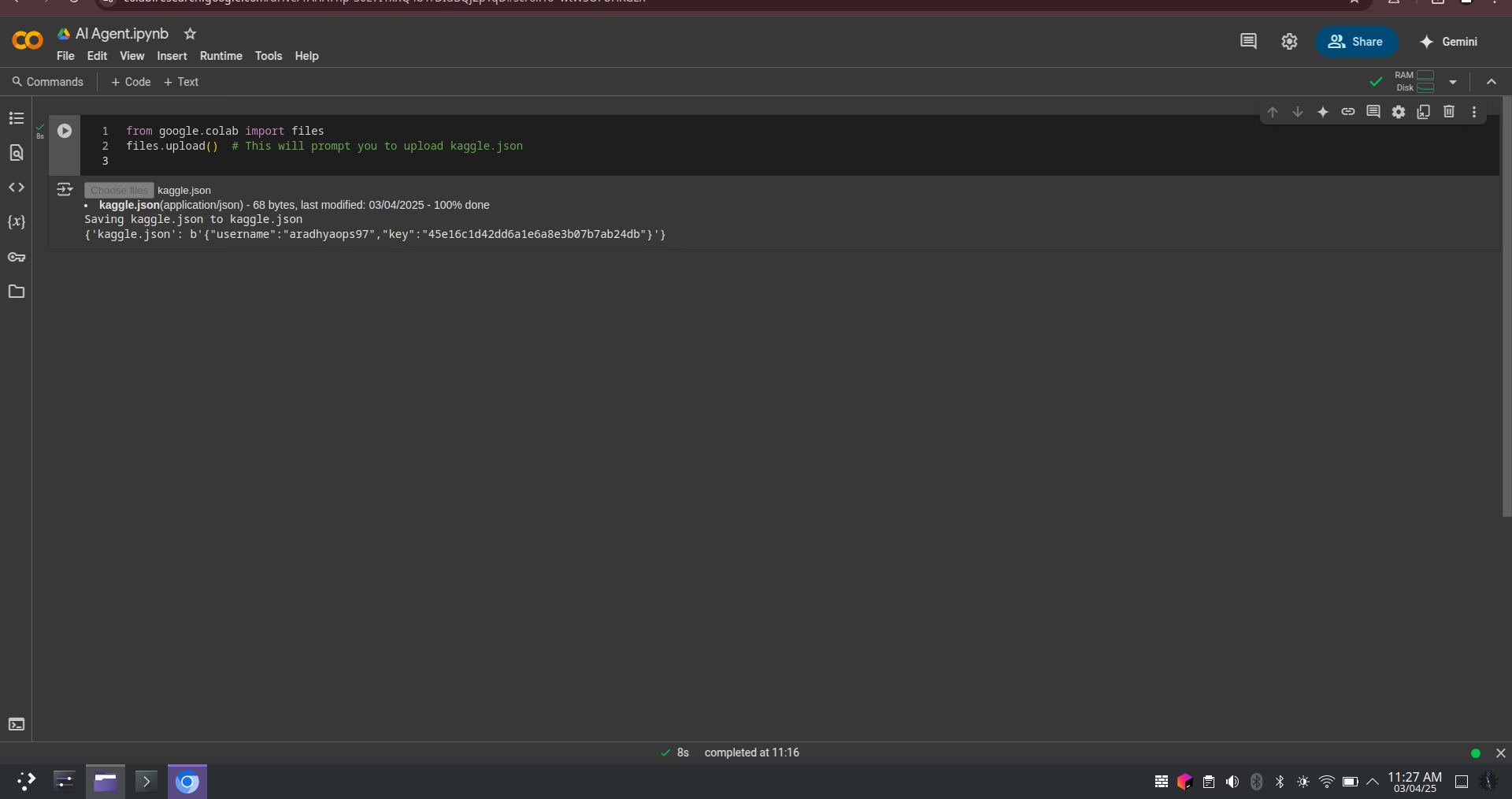

Now visit google collab > Click on ” New Notebook” > Rename your notebook as per your choice > Add a code line

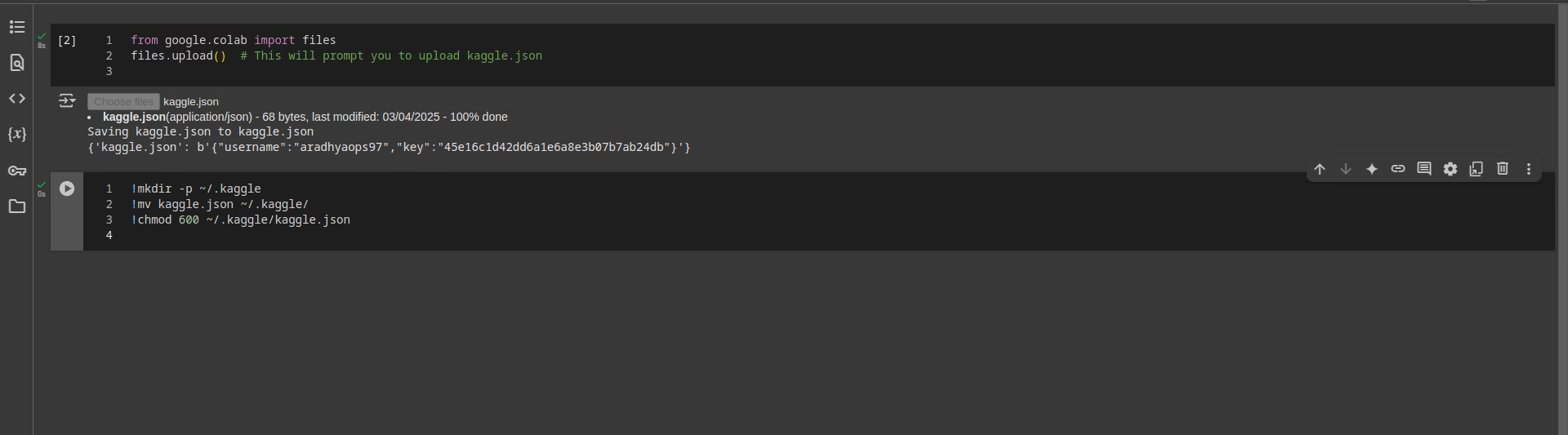

Insert the following code :

from google.colab import files

files.upload() # This will prompt you to upload kaggle.json

This command will prompt you to upload files from your computer >>> Choose the kaggle.json that was previously downloaded.

Insert the following code to place kaggle.json in correct directory :

!mkdir -p ~/.kaggle

!mv kaggle.json ~/.kaggle/

!chmod 600 ~/.kaggle/kaggle.json

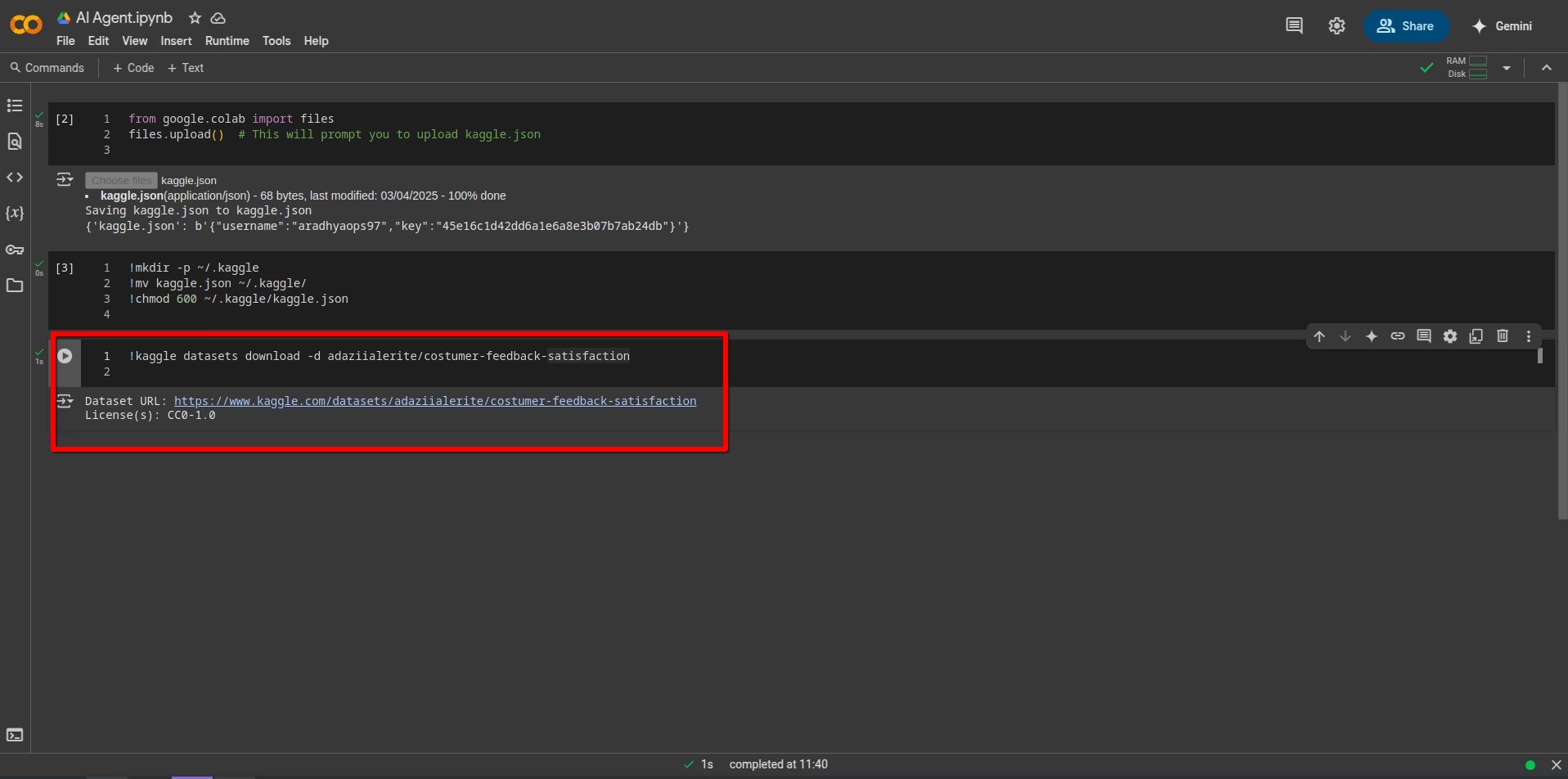

Now if we want to download a specific dataset insert the following command :

!kaggle datasets download -d {path of dataset}

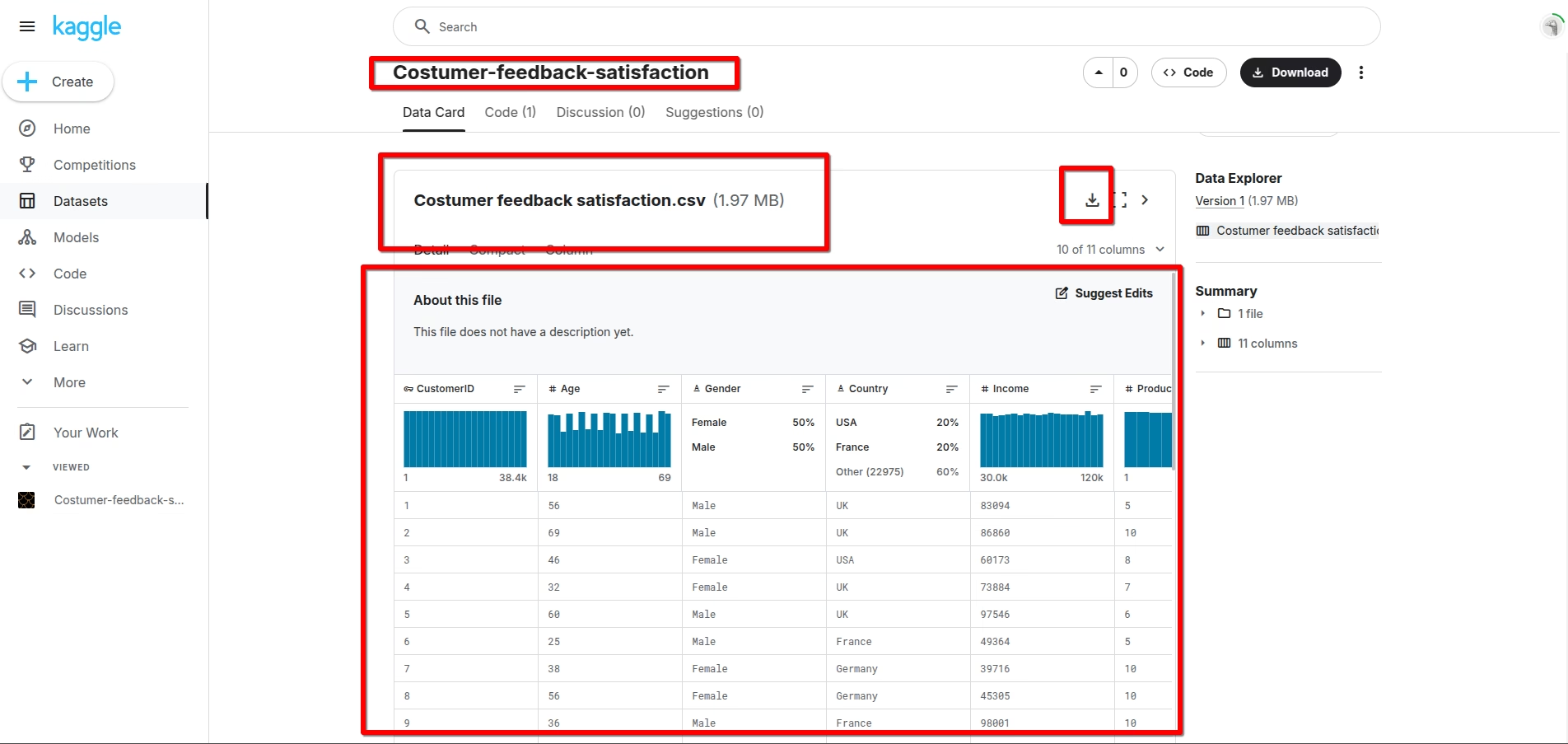

## Insert path in place of "{path of dataset}In my case if I download a customer feedback dataset which is :

So its download command will be :

!kaggle datasets download -d adaziialerite/costumer-feedback-satisfaction

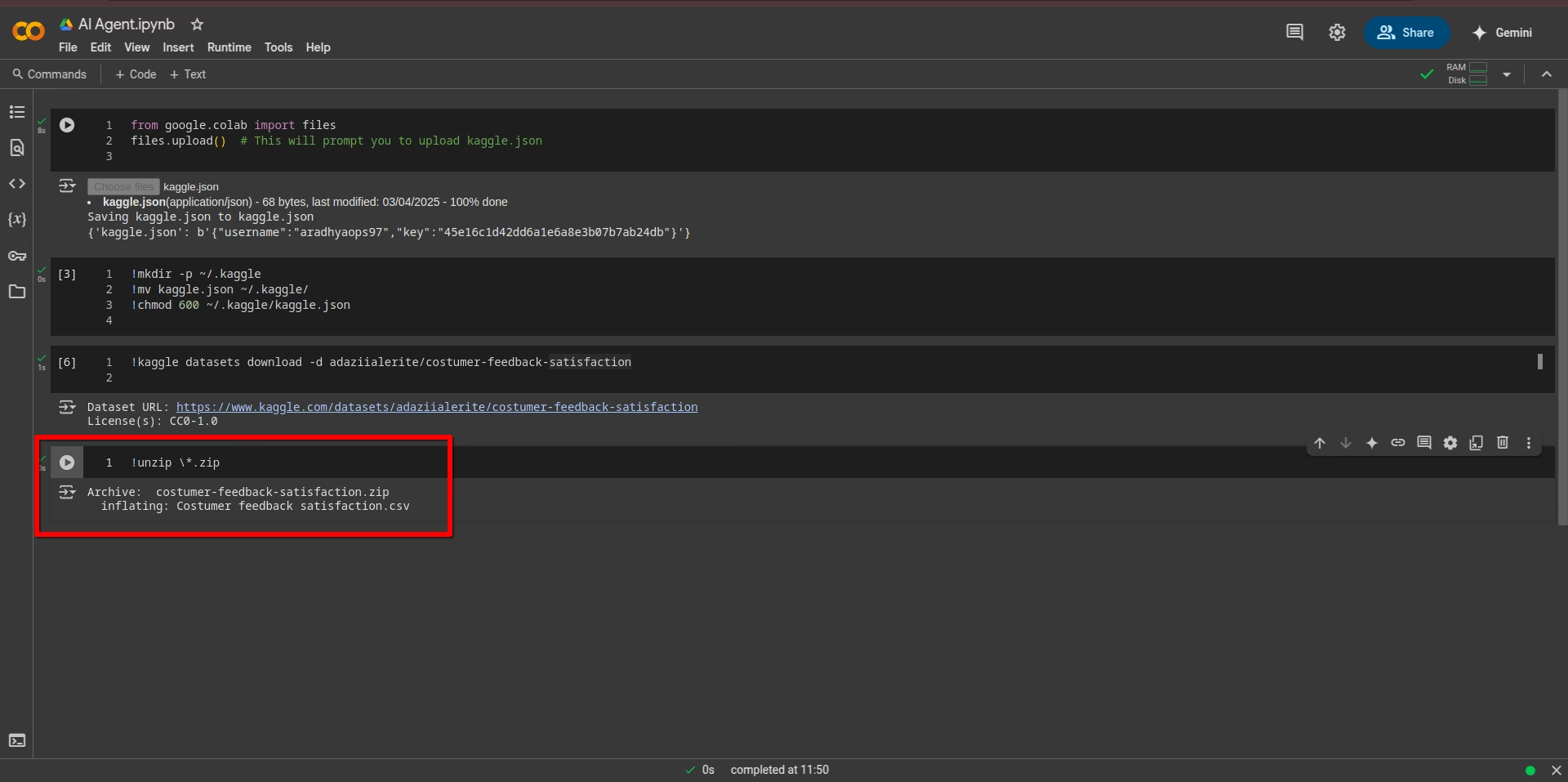

Now once it is downloaded as above image, unzip the file :

Now load it using the pandas library :

import pandas as pd

df = pd.read_csv("Costumer feedback satisfaction.csv")

df.head()

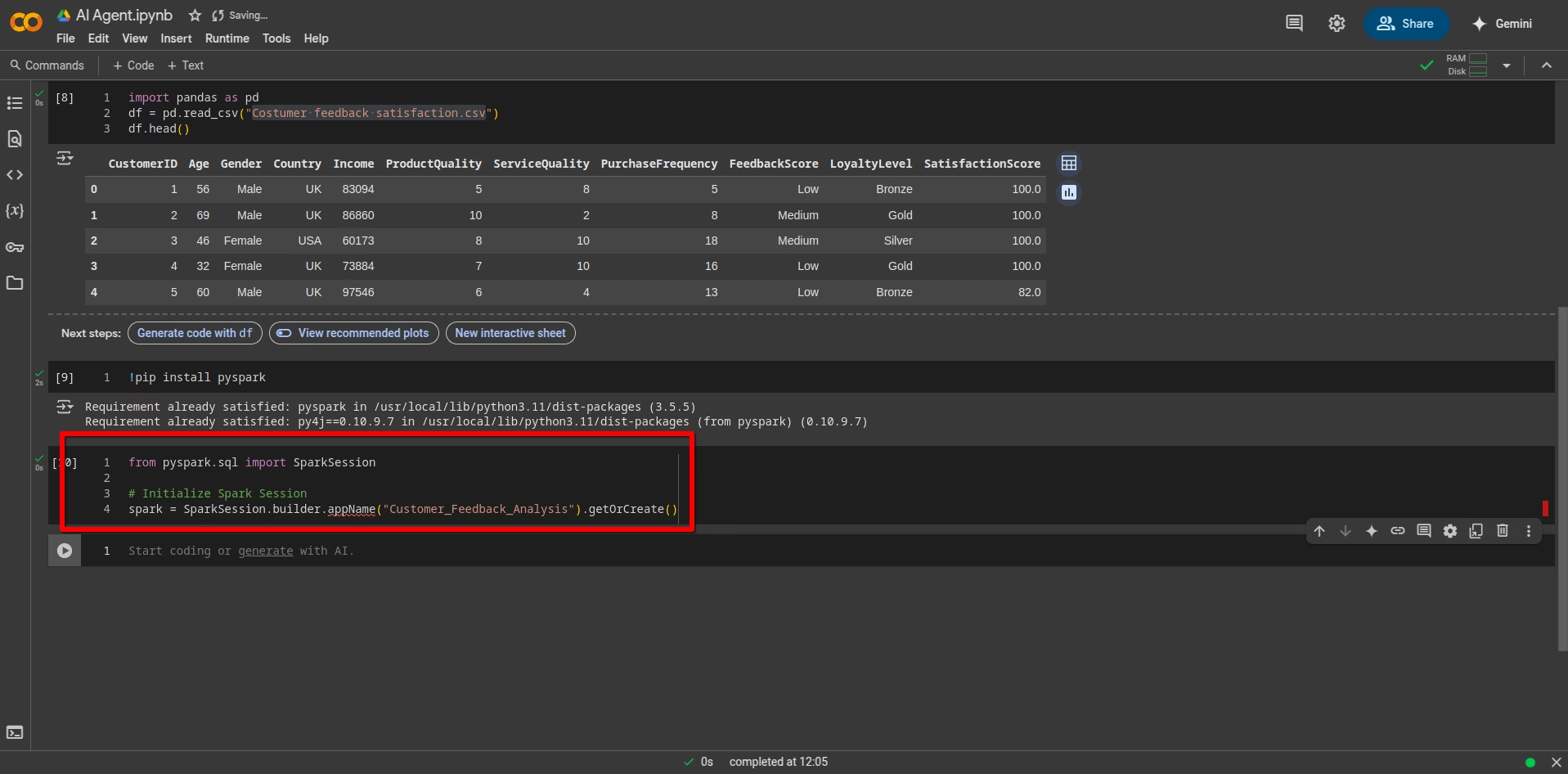

Step 2 : Data Ingestion and Loading

Import PySpark and initialize a Spark session:

from pyspark.sql import SparkSession

# Initialize Spark Session

spark = SparkSession.builder.appName("Customer_Feedback_Analysis").getOrCreate()

Load the Customer Feedback Dataset in PySpark :

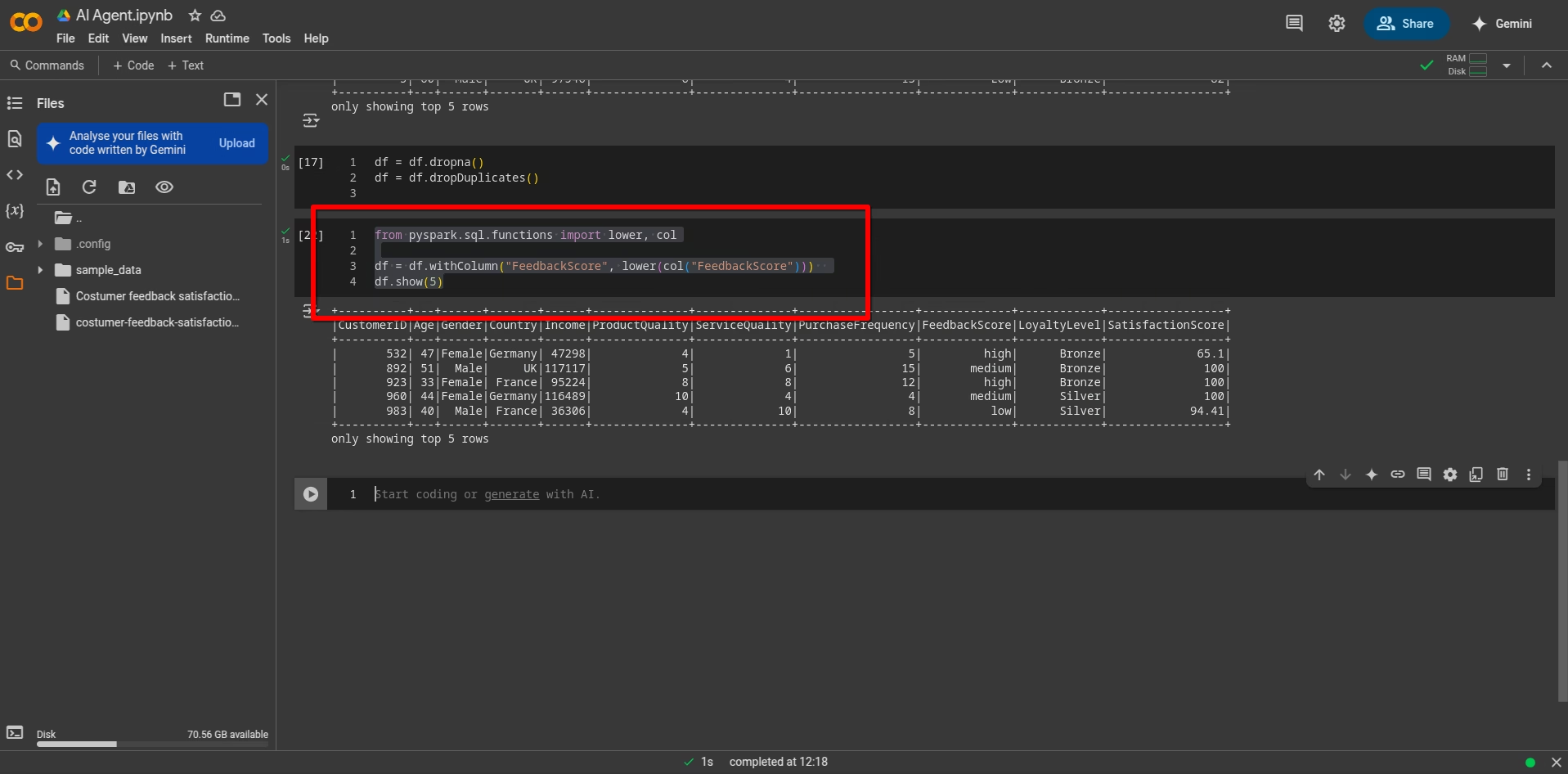

Handling missing values and converting to lowercase :

df = df.dropna()

df = df.dropDuplicates()

from pyspark.sql.functions import lower, col

df = df.withColumn("FeedbackScore", lower(col("FeedbackScore")))

df.show(5)

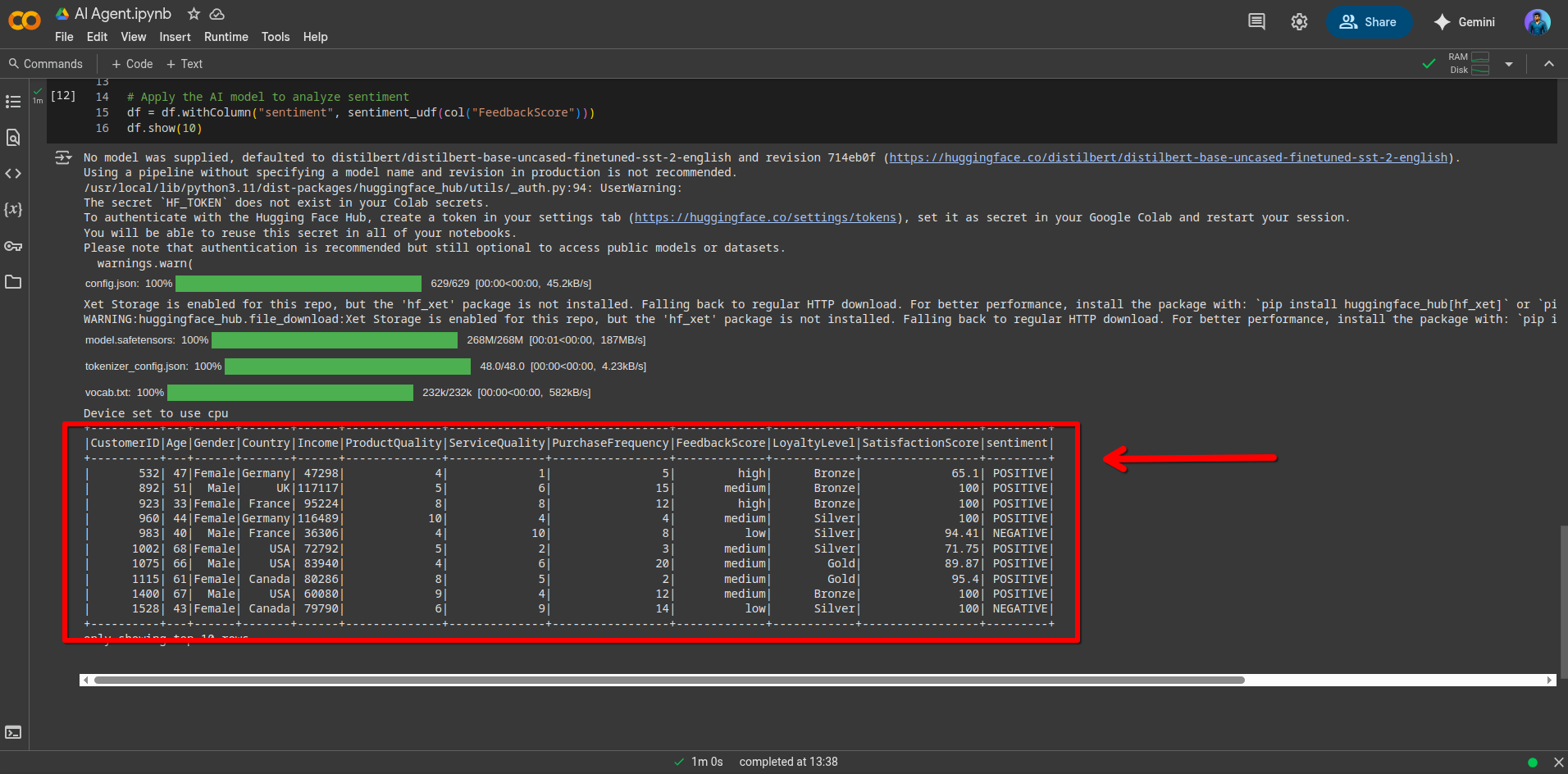

Step 3 : Perform Sentiment Analysis using an AI Model

from transformers import pipeline

from pyspark.sql.functions import udf

from pyspark.sql.types import StringType

# Load a pre-trained sentiment analysis model

sentiment_model = pipeline("sentiment-analysis")

# Define a UDF (User Defined Function) for Spark

def analyze_sentiment(text):

return sentiment_model(text)[0]['label']

sentiment_udf = udf(analyze_sentiment, StringType())

# Apply the AI model to analyze sentiment

df = df.withColumn("sentiment", sentiment_udf(col("FeedbackScore")))

df.show(10)

Step 4 : Aggregate and Generate Insights

from pyspark.sql.functions import count

df_summary = df.groupBy("sentiment").agg(count("FeedbackScore").alias("count"))

df_summary.show()either you can use above command or if it takes longer executions then use the following command :

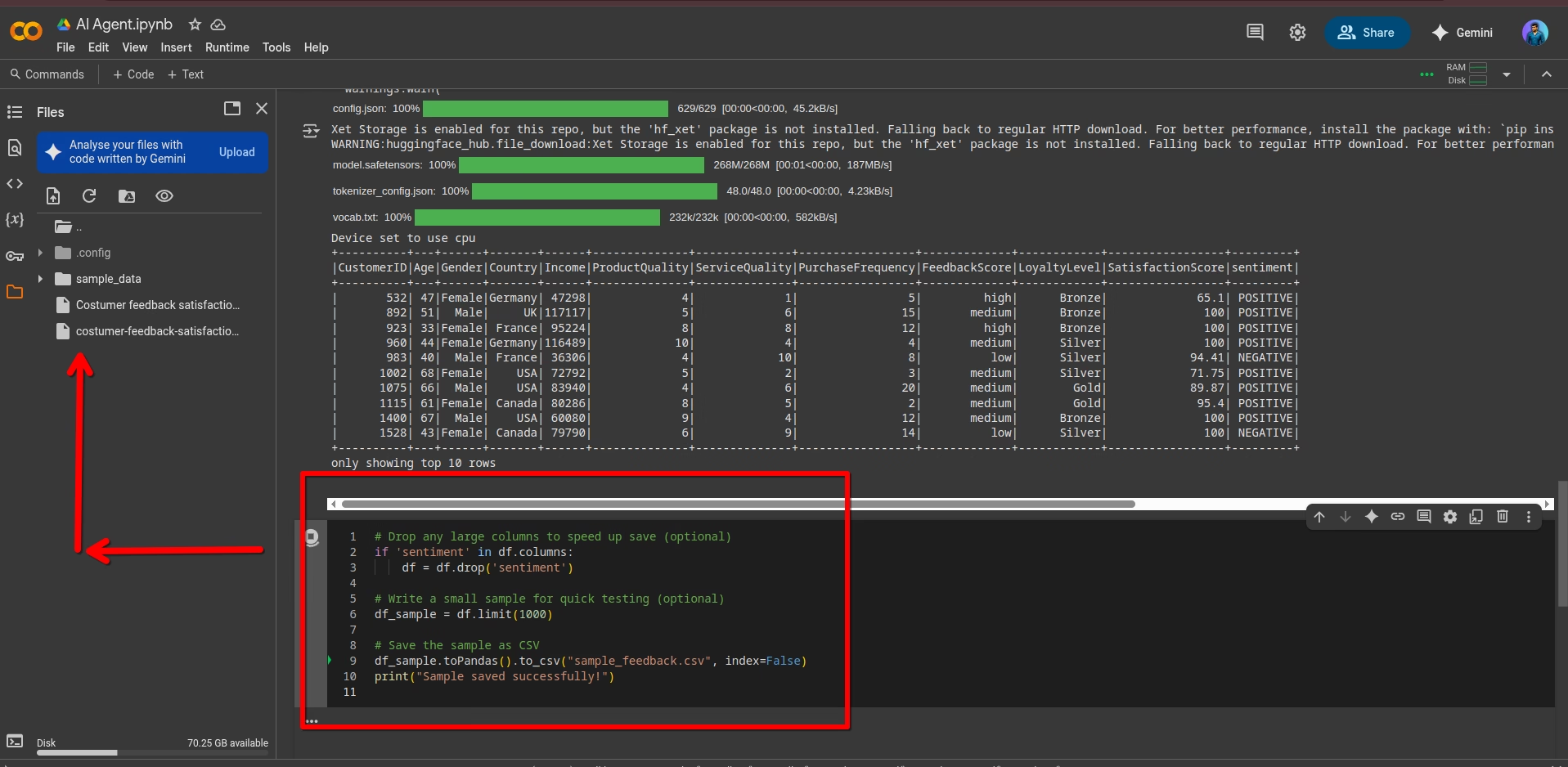

# Drop any large columns to speed up save (optional)

if 'sentiment' in df.columns:

df = df.drop('sentiment')

# Write a small sample for quick testing (optional)

df_sample = df.limit(1000)

# Save the sample as CSV

df_sample.toPandas().to_csv("sample_feedback.csv", index=False)

print("Sample saved successfully!")

7. How to Scale & Optimize AI Agents in Big Data Environments ?

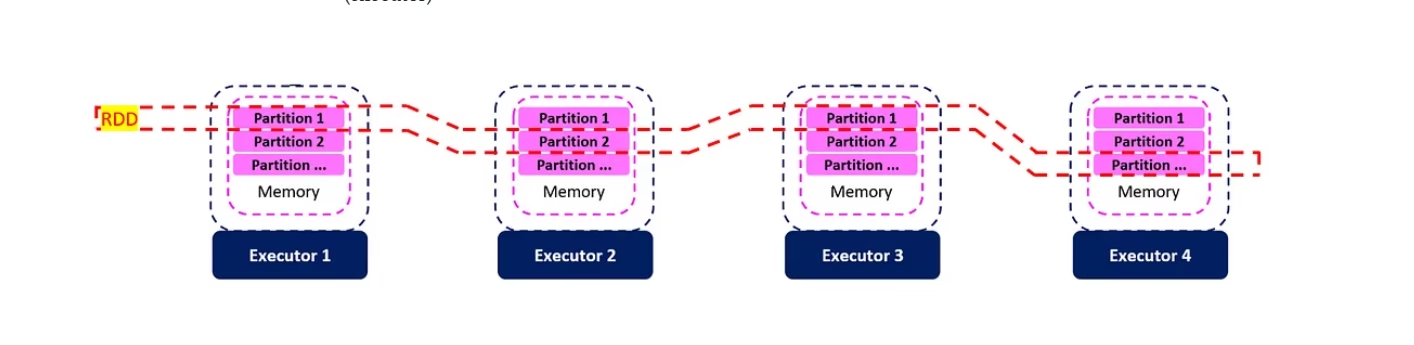

PySpark Partitioning Strategies

Look at this diagram of PySpark Partitioning ( Source : Link Medium )

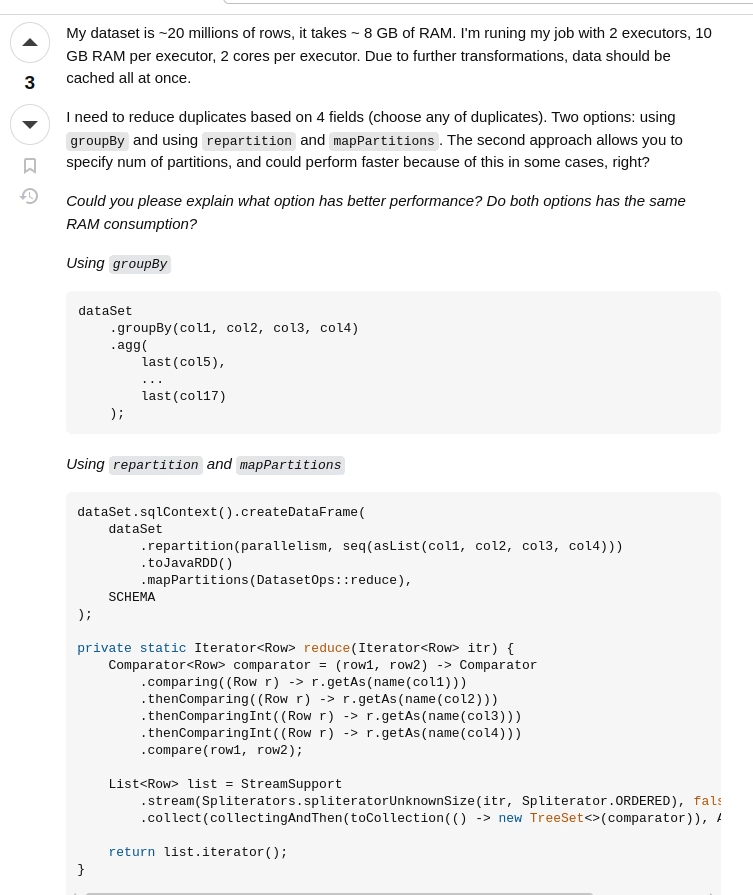

Avoiding Wide Transformations

Below is the image of a question asked on StackOverflow

Spark groupBy vs repartition plus mapPartitions

Description : This visual compares the groupBy operation, which can cause extensive shuffling, with mapPartitions, which processes data within existing partitions, reducing shuffle overhead.

Caching and Checkpointing in Spark

Description : This diagram delineates the differences between caching and checkpointing mechanisms in Spark, highlighting their respective use cases in iterative algorithms.

Visit here : Spark Concepts Simplified: Cache, Persist, and Checkpoint

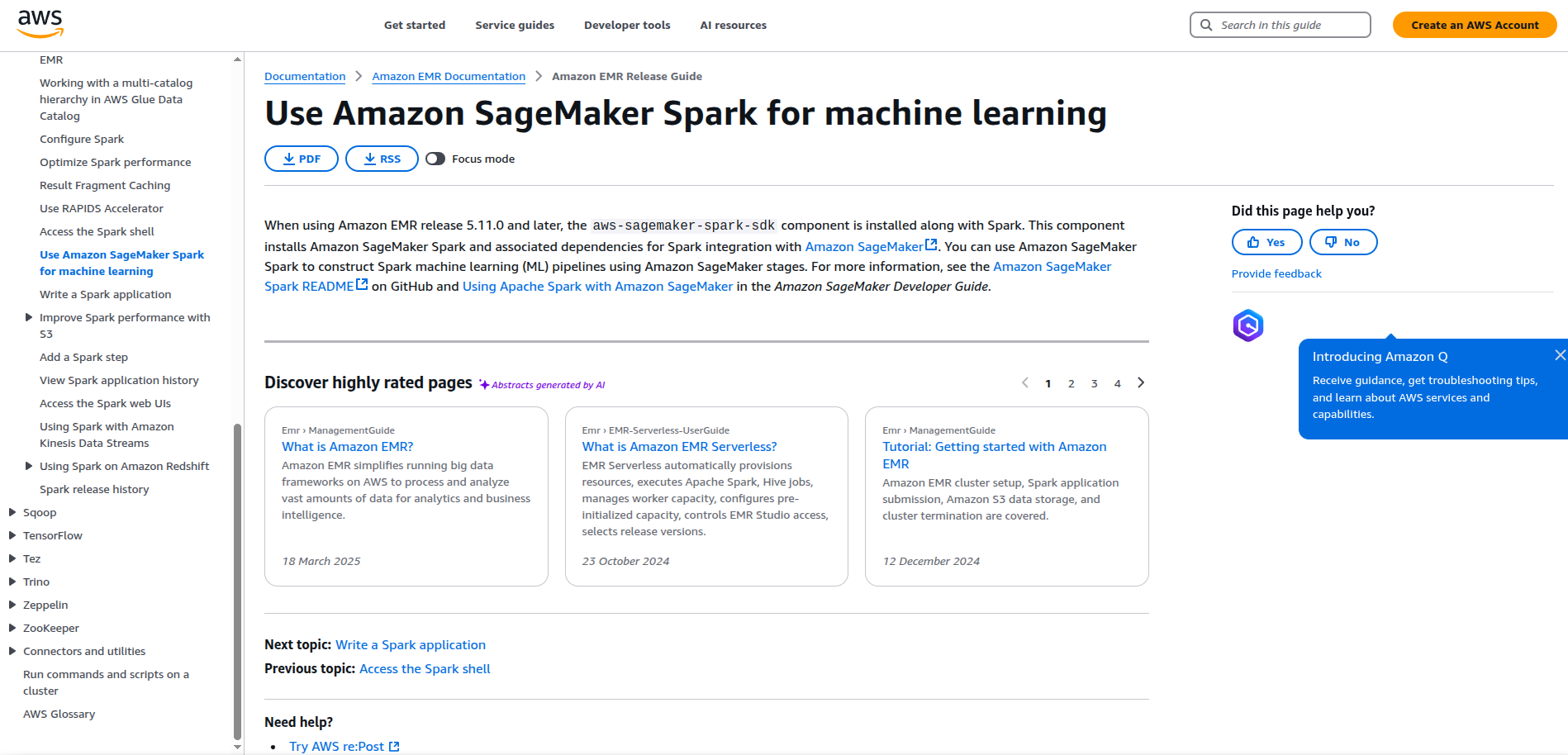

AWS EMR with SageMaker Integration

This architecture diagram showcases the integration of Amazon EMR with SageMaker, facilitating the construction of Spark ML pipelines using SageMaker stages.

GCP Dataproc and Vertex AI Integration

Google Cloud Provider gives seamless integration with Vertex AI

Description : This screenshot guides users through the process of creating a Vertex AI Workbench instance integrated with Dataproc, enabling seamless machine learning workflows. [ GUIDE ]

Azure Synapse and Azure ML Pipelines

Description : This workflow diagram illustrates how to orchestrate data engineering and data science practices by integrating Azure Synapse with Azure Machine Learning pipelines. [ GUIDE ]

9. Deployment & Production Considerations

Running AI Powered PySpark Pipelines in Production

- Package the workflow using Docker + Spark Submit.

- Use Airflow or Apache Beam for pipeline orchestration.

- Store pipeline configs and agent logic in JSON/YAML to allow agent retraining/config tuning without code changes

Monitoring and Logging AI decisions

- Track decisions, context relevance, and model confidence scores via structured logs (e.g., JSON logging).

- Use OpenTelemetry or Prometheus + Grafana for distributed system metrics.

- For RAG-based agents, log top-k retrieved chunks and final output to understand generation quality.

Debugging and Performance Tuning

- Use Spark UI to monitor slow stages and memory usage.

- Profile UDFs and model inference layers using

cProfileorline_profiler. - Parallelize slow parts using Ray or Dask, especially if Spark overhead becomes too high for agent interaction.

10. Conclusion & Next Steps

Summary of what we learn

- Agentic AI can integrate effectively with PySpark to enable autonomous decision-making at big data scale.

- Proper setup, efficient communication, and batching inference are key to reducing bottlenecks.

- Distributed computing can empower agents to process large-scale data without compromising autonomy.

Potential Enhancements & Future Research Directions

- Investigate multi-agent coordination protocols using Pub/Sub systems.

- Benchmark real-time inference + streaming using Spark Structured Streaming + lightweight transformer variants.

- Explore integration with Data Lakehouses like Delta Lake or Apache Iceberg for persistent agent memory.

Additional Learning Resources

- Hugging Face Transformers Documentation [ CLICK HERE ]

- PySpark Official Documentation [ CLICK HERE ]

- Google’s Agentic AI Framework Research Papers [ CLICK HERE ]

- Data Bricks guide to PySpark Performance Tuning [ CLICK HERE ]

For Bonus Material if you want to know about how RAG Automation works kindly visit our previous article :